We have seen that composing shallow networks can give us complex functions. We can extend this idea to construct deep networks with more than two hidden layers; some modern networks have hundreds of layers with thousands of hidden units in each layer.

- The number of hidden units in each layer is referred to as the width of the network

- The number of hidden layers is the depth

- The total number of hidden units is a measure of network capacity

Hyperparameters

We denote the number of layers as $K$ and the number of hidden units in each layer as $D_1, D_2, \ldots, D_K$. These are examples of hyperparameters: quantities chosen before we learn the model parameters (i.e., the slope and intercept terms). For fixed hyperparameters (e.g., $K = 2$ layers with $D_k = 3$ hidden units each), the model describes a family of functions, and the parameters determine the particular function. Hence, when we also consider the hyperparameters, we can think of neural networks as representing a family of families of functions relating input to output.
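To make the "family of functions" idea concrete, here is a minimal NumPy sketch. It assumes ReLU activations and, for illustration only, a scalar input and output. The hyperparameters are fixed at $K = 2$ hidden layers with 3 units each; every random draw of the parameters picks out a different member of the family, so the same input maps to a different output. The helper names `sample_parameters` and `network` are hypothetical.

```python
import numpy as np


def relu(z):
    """ReLU activation, applied elementwise."""
    return np.maximum(0.0, z)


def sample_parameters(D_i, hidden_sizes, D_o, rng):
    """Draw one random setting of the parameters for fixed hyperparameters.

    Returns lists [Omega_0, ..., Omega_K] and [beta_0, ..., beta_K].
    """
    sizes = [D_i] + list(hidden_sizes) + [D_o]
    omegas = [rng.standard_normal((sizes[k + 1], sizes[k])) for k in range(len(sizes) - 1)]
    betas = [rng.standard_normal(sizes[k + 1]) for k in range(len(sizes) - 1)]
    return omegas, betas


def network(x, omegas, betas):
    """Evaluate the network: ReLU hidden layers, linear output layer."""
    h = x
    for omega, beta in zip(omegas[:-1], betas[:-1]):
        h = relu(beta + omega @ h)
    return betas[-1] + omegas[-1] @ h


# Hyperparameters are fixed: K = 2 hidden layers with D_k = 3 units each.
rng = np.random.default_rng(0)
x = np.array([0.5])
for draw in range(3):
    omegas, betas = sample_parameters(1, [3, 3], 1, rng)
    # Same architecture, different parameters -> a different function of x.
    print(f"draw {draw}: y = {network(x, omegas, betas)}")
```

Changing `[3, 3]` to another list of layer sizes changes the hyperparameters, i.e., selects a different family.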

General Formulation

- See Matrix Notation for an introduction to matrix notation for a simple composition of shallow networks

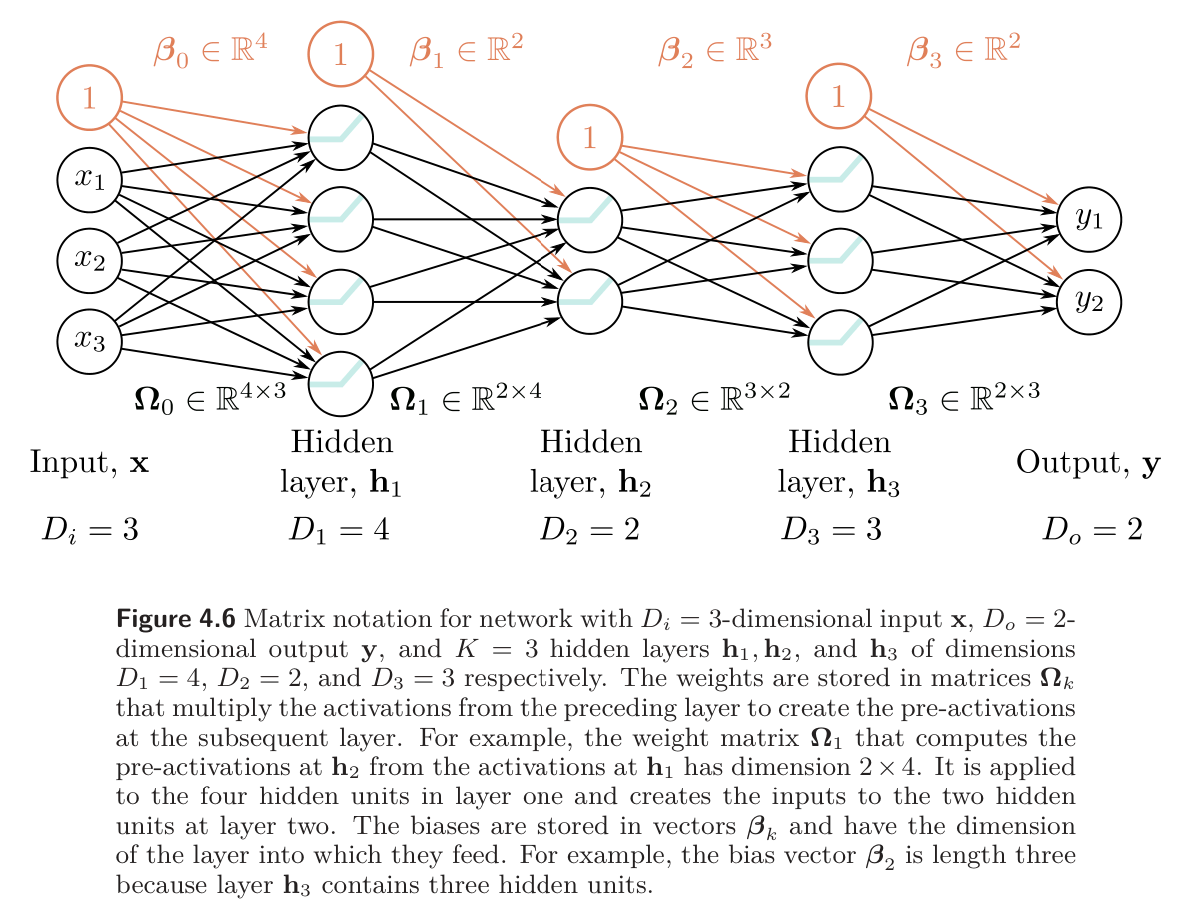

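We describe the vector of hidden units at layer $k$ as $\mathbf{h}_k$, the vector of biases (intercepts) that contribute to hidden layer $k+1$ as $\boldsymbol{\beta}_k$, and the weights (slopes) that are applied to the $k$-th layer and contribute to the $(k+1)$-th layer as $\boldsymbol{\Omega}_k$. A general deep network $\mathbf{y} = \mathbf{f}[\mathbf{x}, \boldsymbol{\phi}]$ with $K$ layers can now be written as:

$$
\begin{aligned}
\mathbf{h}_1 &= \mathbf{a}[\boldsymbol{\beta}_0 + \boldsymbol{\Omega}_0 \mathbf{x}] \\
\mathbf{h}_2 &= \mathbf{a}[\boldsymbol{\beta}_1 + \boldsymbol{\Omega}_1 \mathbf{h}_1] \\
\mathbf{h}_3 &= \mathbf{a}[\boldsymbol{\beta}_2 + \boldsymbol{\Omega}_2 \mathbf{h}_2] \\
&\;\;\vdots \\
\mathbf{h}_K &= \mathbf{a}[\boldsymbol{\beta}_{K-1} + \boldsymbol{\Omega}_{K-1} \mathbf{h}_{K-1}] \\
\mathbf{y} &= \boldsymbol{\beta}_K + \boldsymbol{\Omega}_K \mathbf{h}_K
\end{aligned}
$$

where $\mathbf{a}[\cdot]$ applies the activation function (e.g., ReLU) separately to each element of its input.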

The parameters $\boldsymbol{\phi}$ of this model comprise all of these weight matrices $\{\boldsymbol{\Omega}_k\}_{k=0}^{K}$ and bias vectors $\{\boldsymbol{\beta}_k\}_{k=0}^{K}$.
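The recursion $\mathbf{h}_{k+1} = \mathbf{a}[\boldsymbol{\beta}_k + \boldsymbol{\Omega}_k \mathbf{h}_k]$, starting from $\mathbf{h}_0 = \mathbf{x}$ and ending with the linear output $\mathbf{y} = \boldsymbol{\beta}_K + \boldsymbol{\Omega}_K \mathbf{h}_K$, can be sketched as a loop over the parameter lists. This is a minimal NumPy sketch, assuming ReLU as the activation; the function name `deep_forward` and the tiny example values are hypothetical.

```python
import numpy as np


def relu(z):
    """Activation a[.]: ReLU applied to each element."""
    return np.maximum(0.0, z)


def deep_forward(x, omegas, betas, a=relu):
    """Forward pass of a network with K hidden layers.

    omegas = [Omega_0, ..., Omega_K] and betas = [beta_0, ..., beta_K].
    Returns the hidden activations [h_1, ..., h_K] and the output y.
    """
    hs = []
    h = x  # h_0 = x
    for omega, beta in zip(omegas[:-1], betas[:-1]):
        h = a(beta + omega @ h)  # h_{k+1} = a[beta_k + Omega_k h_k]
        hs.append(h)
    y = betas[-1] + omegas[-1] @ h  # y = beta_K + Omega_K h_K (no activation)
    return hs, y


# Tiny hypothetical example with K = 2 hidden layers (2 units, then 1 unit).
x = np.array([1.0, -1.0])
omegas = [np.eye(2), np.array([[1.0, 1.0]]), np.array([[2.0]])]
betas = [np.zeros(2), np.array([-0.5]), np.array([1.0])]
hs, y = deep_forward(x, omegas, betas)
# h_1 = relu([1, -1]) = [1, 0]; h_2 = relu(1 + 0 - 0.5) = [0.5]; y = 1 + 2 * 0.5 = [2.0]
```

Passing a different callable as `a` swaps the activation function without touching the loop.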

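- If the $k$-th layer has $D_k$ hidden units, then the bias vector $\boldsymbol{\beta}_{k-1}$ will be of size $D_k$. The last bias vector $\boldsymbol{\beta}_K$ has the size $D_o$ of the output.

- The first weight matrix $\boldsymbol{\Omega}_0$ has size $D_1 \times D_i$, where $D_i$ is the size of the input.

- The last weight matrix $\boldsymbol{\Omega}_K$ is $D_o \times D_K$, and the remaining matrices $\boldsymbol{\Omega}_k$ are $D_{k+1} \times D_k$.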
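One way to check these sizes is to generate them. The plain-Python sketch below lists the shape of each $\boldsymbol{\Omega}_k$ and $\boldsymbol{\beta}_k$ and totals the parameter count. For convenience it writes $D_0 = D_i$ and $D_{K+1} = D_o$; the function names and the example sizes are hypothetical.

```python
def parameter_shapes(D_i, hidden_sizes, D_o):
    """Shapes of (Omega_k, beta_k) for k = 0, ..., K.

    Uses D_0 = D_i for the input and D_{K+1} = D_o for the output.
    """
    sizes = [D_i] + list(hidden_sizes) + [D_o]
    shapes = []
    for k in range(len(sizes) - 1):
        omega_shape = (sizes[k + 1], sizes[k])  # Omega_k: D_{k+1} x D_k
        beta_shape = (sizes[k + 1],)            # beta_k: size D_{k+1}
        shapes.append((omega_shape, beta_shape))
    return shapes


def count_parameters(D_i, hidden_sizes, D_o):
    """Total number of weights and biases."""
    total = 0
    for (rows, cols), (n_bias,) in parameter_shapes(D_i, hidden_sizes, D_o):
        total += rows * cols + n_bias
    return total


# Hypothetical sizes: 3 inputs, hidden layers of 4 and 5 units, 2 outputs.
for k, (omega_shape, beta_shape) in enumerate(parameter_shapes(3, [4, 5], 2)):
    print(f"Omega_{k}: {omega_shape}, beta_{k}: {beta_shape}")
print("parameters:", count_parameters(3, [4, 5], 2))  # parameters: 53
```

The total here is $(4 \cdot 3 + 4) + (5 \cdot 4 + 5) + (2 \cdot 5 + 2) = 53$.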

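We can equivalently write the network as a single function:

$$
\mathbf{y} = \boldsymbol{\beta}_K + \boldsymbol{\Omega}_K \mathbf{a}\Big[\boldsymbol{\beta}_{K-1} + \boldsymbol{\Omega}_{K-1} \mathbf{a}\big[\ldots \boldsymbol{\beta}_2 + \boldsymbol{\Omega}_2 \mathbf{a}[\boldsymbol{\beta}_1 + \boldsymbol{\Omega}_1 \mathbf{a}[\boldsymbol{\beta}_0 + \boldsymbol{\Omega}_0 \mathbf{x}]] \ldots\big]\Big]
$$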
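The single-function form $\mathbf{y} = \boldsymbol{\beta}_K + \boldsymbol{\Omega}_K \mathbf{a}[\ldots \mathbf{a}[\boldsymbol{\beta}_0 + \boldsymbol{\Omega}_0 \mathbf{x}] \ldots]$ is just the layer-by-layer recursion with every $\mathbf{h}_k$ substituted in. The sketch below (NumPy, assuming ReLU; names and values are hypothetical) evaluates the nesting recursively and compares it with the same $K = 2$ expression written out by hand.

```python
import numpy as np


def relu(z):
    return np.maximum(0.0, z)


def nested_network(x, omegas, betas):
    """Evaluate y = beta_K + Omega_K a[... a[beta_0 + Omega_0 x] ...] recursively."""
    K = len(omegas) - 1

    def h(k):
        # Hidden activation h_k, with h_0 = x.
        if k == 0:
            return x
        return relu(betas[k - 1] + omegas[k - 1] @ h(k - 1))

    return betas[K] + omegas[K] @ h(K)


# Hypothetical K = 2 network; write the nesting out by hand for comparison.
x = np.array([2.0])
O0, b0 = np.array([[1.0], [-1.0]]), np.array([0.0, 1.0])
O1, b1 = np.array([[0.5, 3.0]]), np.array([-2.0])
O2, b2 = np.array([[4.0]]), np.array([0.25])

explicit = b2 + O2 @ relu(b1 + O1 @ relu(b0 + O0 @ x))
recursive = nested_network(x, [O0, O1, O2], [b0, b1, b2])
print(explicit, recursive)  # [0.25] [0.25]
```

In this example the ReLU in the second hidden layer outputs zero, so the output reduces to $\boldsymbol{\beta}_2$.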

In diagram form:

Clarification on terminology

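- Layer $k$ is the operation $\mathbf{h}_k = \mathbf{a}[\boldsymbol{\beta}_{k-1} + \boldsymbol{\Omega}_{k-1} \mathbf{h}_{k-1}]$ that maps $\mathbf{h}_{k-1}$ to $\mathbf{h}_k$ (with $\mathbf{h}_0 = \mathbf{x}$).

- The activations $\mathbf{h}_k$ are the result of applying the operation of layer $k$.

- However, “layer $k$” is also used to refer to “layer $k$’s activations,” which can be confusing.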
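The distinction maps naturally onto code: a layer is a function, and its activations are the array that function returns. In this minimal sketch (NumPy, ReLU assumed), `make_layer` and the weights are hypothetical.

```python
import numpy as np


def make_layer(omega, beta):
    """Return layer k as an operation: a function mapping h_{k-1} to h_k."""
    def layer(h_prev):
        return np.maximum(0.0, beta + omega @ h_prev)  # ReLU(beta + Omega h_prev)
    return layer


# "Layer 1" in the operation sense: a function (hypothetical weights).
layer_1 = make_layer(np.array([[1.0, -1.0]]), np.array([0.5]))

# "Layer 1" in the activation sense: the array h_1 it produces for an input.
x = np.array([3.0, 1.0])
h_1 = layer_1(x)
print(callable(layer_1), h_1)  # True [2.5]
```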

Example Computation

Let’s consider a numeric example of the above. We use a training input of

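Layer 1: We first have $\boldsymbol{\Omega}_0$, which maps the input ($\mathbf{x}$) to the first hidden layer ($\mathbf{h}_1$), and a corresponding bias vector $\boldsymbol{\beta}_0$:

Then, the first pre-activation $\boldsymbol{\beta}_0 + \boldsymbol{\Omega}_0 \mathbf{x}$ is:

Passing this through the ReLU gives the first hidden layer $\mathbf{h}_1$: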
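The Layer 1 step can be reproduced in NumPy. The values of $\mathbf{x}$, $\boldsymbol{\Omega}_0$, and $\boldsymbol{\beta}_0$ below are hypothetical, chosen only so the arithmetic is easy to follow; they are not the example's values.

```python
import numpy as np


def relu(z):
    """ReLU: keep positive entries, set negative entries to zero."""
    return np.maximum(0.0, z)


# Hypothetical values (not the example's numbers).
x = np.array([1.0, 2.0])                  # input, D_i = 2
omega_0 = np.array([[ 1.0, -1.0],
                    [ 0.5,  0.5],
                    [-2.0,  1.0]])        # Omega_0: D_1 x D_i = 3 x 2
beta_0 = np.array([0.0, -1.0, 0.5])       # beta_0: size D_1 = 3

pre_1 = beta_0 + omega_0 @ x   # pre-activation: -1.0, 0.5, 0.5
h_1 = relu(pre_1)              # first hidden layer: 0.0, 0.5, 0.5
```

Each entry of `pre_1` is one bias plus the dot product of one row of $\boldsymbol{\Omega}_0$ with $\mathbf{x}$; the ReLU then zeroes the negative entry.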

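Layer 2: Now we have $\boldsymbol{\Omega}_1$, which maps the first hidden layer ($\mathbf{h}_1$) to the second hidden layer ($\mathbf{h}_2$), and a corresponding bias vector $\boldsymbol{\beta}_1$:

Then, the pre-activation $\boldsymbol{\beta}_1 + \boldsymbol{\Omega}_1 \mathbf{h}_1$ is:

Passing this through the ReLU gives $\mathbf{h}_2$: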
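Every hidden layer repeats the same pattern (affine map, then ReLU), so it can be written once as a function. This NumPy sketch applies it to Layer 2 with hypothetical values for $\mathbf{h}_1$, $\boldsymbol{\Omega}_1$, and $\boldsymbol{\beta}_1$ (not the example's numbers); `hidden_layer` is a hypothetical helper name.

```python
import numpy as np


def hidden_layer(h_prev, omega, beta):
    """One hidden layer: returns (pre-activation, ReLU activation)."""
    pre = beta + omega @ h_prev
    return pre, np.maximum(0.0, pre)


# Hypothetical values (not the example's numbers).
h_1 = np.array([0.0, 0.5, 0.5])              # first hidden layer, D_1 = 3
omega_1 = np.array([[1.0,  2.0, -1.0],
                    [0.0, -4.0,  2.0]])      # Omega_1: D_2 x D_1 = 2 x 3
beta_1 = np.array([0.25, -0.5])              # beta_1: size D_2 = 2

pre_2, h_2 = hidden_layer(h_1, omega_1, beta_1)
# pre_2 = [0.75, -1.5], so h_2 = [0.75, 0.0]
```

The same function computes Layer 3 from $\mathbf{h}_2$, $\boldsymbol{\Omega}_2$, and $\boldsymbol{\beta}_2$.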

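Layer 3: Now, $\boldsymbol{\Omega}_2$ maps $\mathbf{h}_2$ to $\mathbf{h}_3$, with bias vector $\boldsymbol{\beta}_2$:

We then compute the pre-activation $\boldsymbol{\beta}_2 + \boldsymbol{\Omega}_2 \mathbf{h}_2$:

Then, applying ReLU gives the activations $\mathbf{h}_3$ of the third hidden layer: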

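Output layer: Finally, $\boldsymbol{\Omega}_3$ maps $\mathbf{h}_3$ to the output, with bias vector $\boldsymbol{\beta}_3$:

We use these to compute the output pre-activation $\boldsymbol{\beta}_3 + \boldsymbol{\Omega}_3 \mathbf{h}_3$. Since no activation function is applied at the output layer, this is the network output $\mathbf{y}$: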
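Putting the steps together, the sketch below runs a complete forward pass for a network with $K = 3$ hidden layers (3, 2, and 2 units), a 2-D input, and a scalar output, printing each pre-activation and activation along the way. All parameter values are hypothetical, picked for easy arithmetic; they are not the example's values.

```python
import numpy as np


def relu(z):
    return np.maximum(0.0, z)


# Hypothetical parameters for K = 3 hidden layers (3, 2, 2 units),
# a 2-D input and a scalar output. These are NOT the example's values.
x = np.array([1.0, 2.0])
omegas = [
    np.array([[1.0, -1.0], [0.5, 0.5], [-2.0, 1.0]]),   # Omega_0: 3 x 2
    np.array([[1.0, 2.0, -1.0], [0.0, -4.0, 2.0]]),     # Omega_1: 2 x 3
    np.array([[2.0, 1.0], [-1.0, 3.0]]),                # Omega_2: 2 x 2
    np.array([[4.0, -2.0]]),                            # Omega_3: 1 x 2
]
betas = [
    np.array([0.0, -1.0, 0.5]),   # beta_0
    np.array([0.25, -0.5]),       # beta_1
    np.array([-1.0, 1.0]),        # beta_2
    np.array([0.5]),              # beta_3
]

h = x
for k in range(3):
    pre = betas[k] + omegas[k] @ h   # pre-activation for hidden layer k + 1
    h = relu(pre)                    # activations h_{k+1}
    print(f"layer {k + 1}: pre-activation {pre}, activation {h}")

y = betas[3] + omegas[3] @ h         # output layer: no ReLU
print("output:", y)
# h_3 = [0.5, 0.25], so y = 0.5 + 4 * 0.5 - 2 * 0.25 = 2.0
```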