A popular iterative method for root finding.

Steps

- Write function in the form .

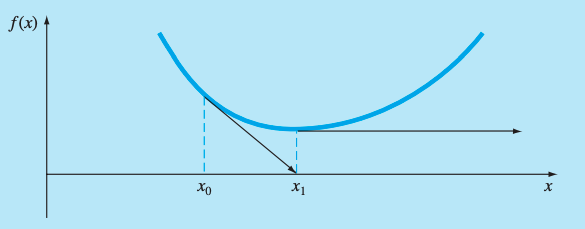

- Choose near root

- Find , slope of at

- Extend to -axis to find such that:

- Repeats steps 2 and 3.

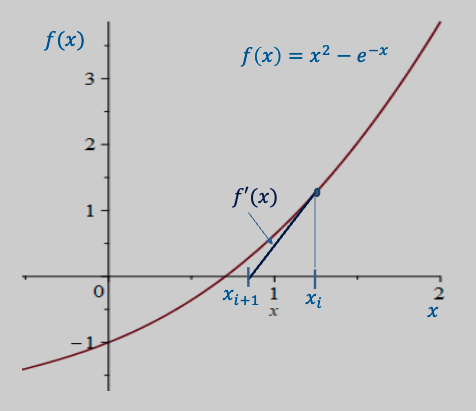

Example

Let us try to solve .

We have:

So:

Thus we have:

| iteration | |||

|---|---|---|---|

| 1 | 1 (initial) | 0.73304 | |

| 2 | 0.73304 | 0.70384 | |

| 3 | 0.70384 | 0.7034675 | |

| 4 | 0.7034675 | 0.703674 |

Errors and Termination

Like other approaches, the approximate relative error can be used to monitor convergence:

Convergence Monitoring with

When the termination criterion is achieved, you should always substitute the calculated root back into the original equation to determine if the result is close to zero. This guards against slow convergence or oscillation, where the approximate error may be small but the solution is far from the root.

Newton-Raphson has some interesting properties: it’s quadratically convergent, such that its absolute true error is approximately proportional to the square of the previous error:

This means:

- It can be very slow

- The number of correct decimal places approximately doubles for each iteration.

Also, you can’t predict the number of iterations to convergence unlike bracketing because the function is dependent on initial values.

Challenges

-

Slow convergence due to quadratic nature.

-

Can diverge if initial value not chosen properly.

-

Cannot handle multiple roots.

-

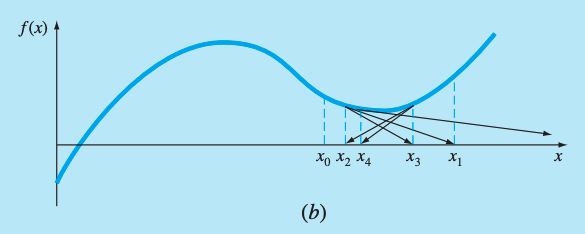

Inflection points (such that ) near the root. Note that iterations beginning at progressively diverge from the root.

-

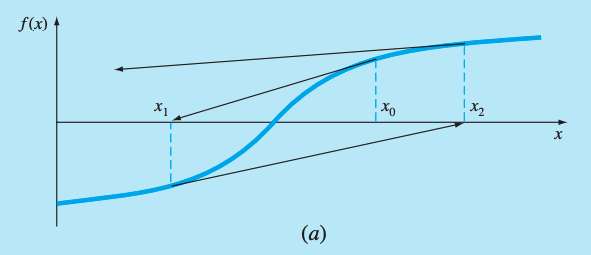

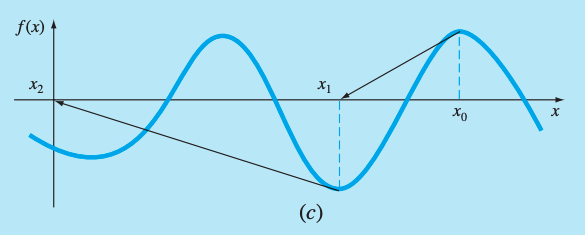

Tends to oscillate around a local maximum or minimum. These oscillations may persist for many iterations.

-

When a near-zero slope is reached, where the next is sent far from the area of interest. An initial guess close to one root in the image above jumped to a location several roots away.

-

A zero slope of would be a true disaster as it results in division by zero. Graphically, the solution would shoot off horizontally and never hit the -axis (shown below).