This is an example of Gradient Descent in one dimension. Expanded to multiple dimensions in Multiple Dimension Gradient Descent

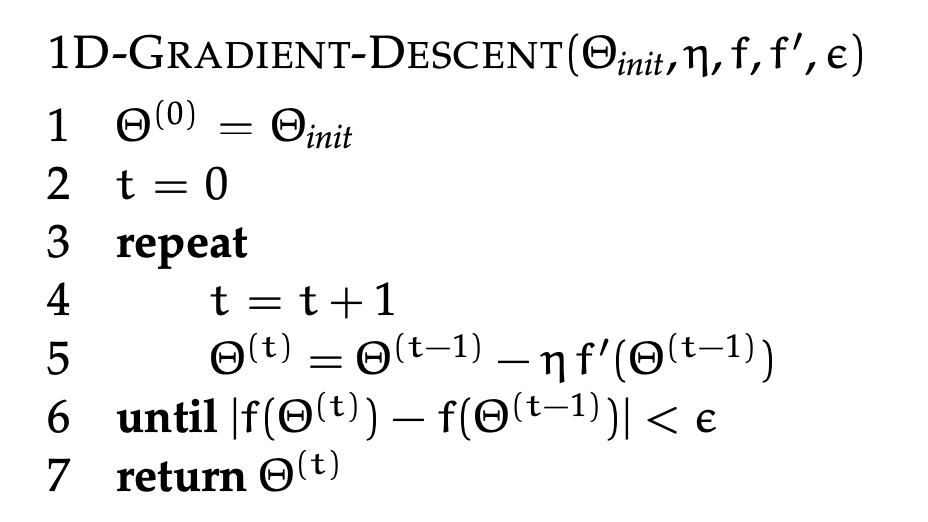

Let’s say we have some arbitrary function . We specify an initial value for parameter , a step-size parameter , and an accuracy parameter . Then, the 1D gradient descent algorithm is:

This algorithm terminates when the change in the function is sufficiently small (less than ). This is similar to the convergence criterion used in Numerical Methods. There are also other options on when to terminate this algorithm, such as:

- Stop after a fixed number of iterations,

- Stop when the change in the value of parameter is sufficiently small, when

- Stop when the derivative at the latest value of is sufficiently small, when

Convergence

Step Size

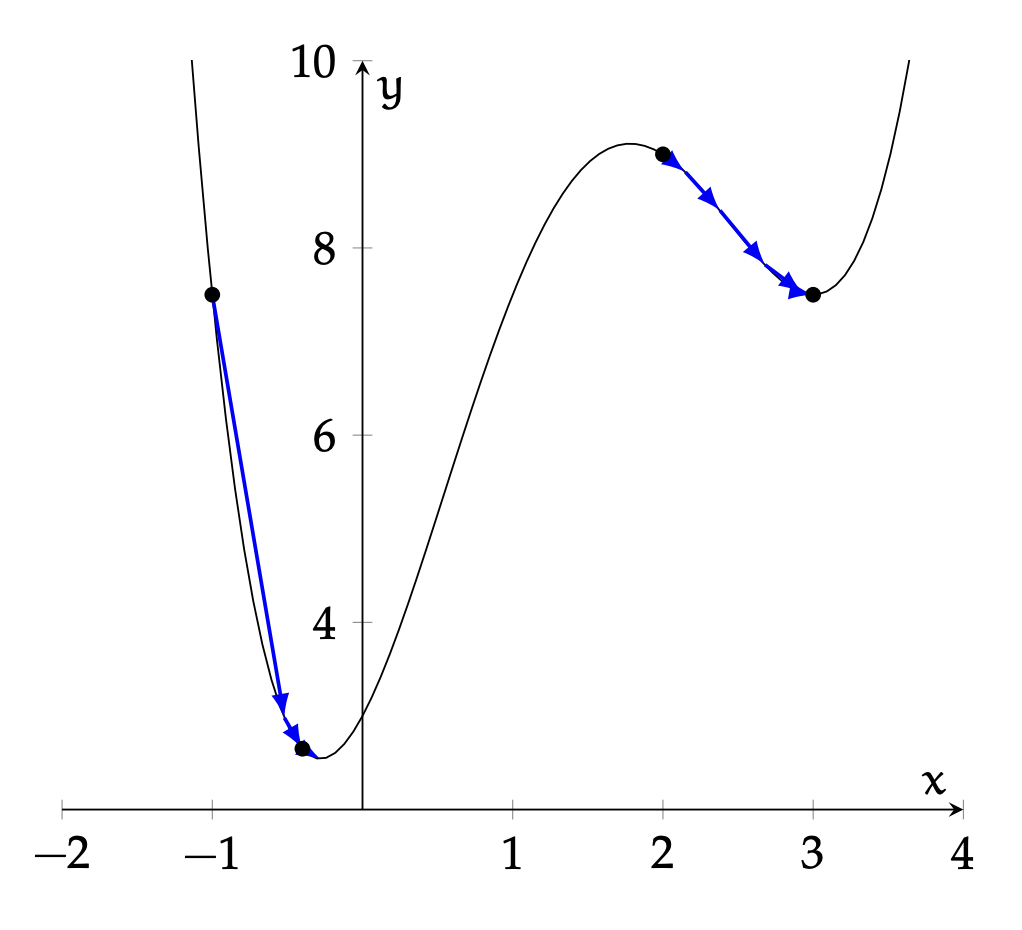

We have to choose the step size carefully; too small of a value will mean convergence is slow, and too big may cause oscillation around the minimum.

Local vs. Global Minimums

Another thing we have to think about is absolute/global vs. local minima; our function may find a local minima instead of the absolute one.

Theorem

If is convex, for any desired accuracy , there is some step size such that gradient descent will converge to within of the optimal .

For non-convex functions, the point of convergence depends on . When we reach a where , it is a local minimum. This is shown in the function below.

This method reminds me a lot of Newton-Raphson Method