Building on Gradient Descent and the general idea of Machine Learning as Optimization, we can apply gradient descent to logistic regression.

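We are considering a linear separator defined by $\theta$ and $\theta_0$, where our hypothesis class or guess is $g = \sigma(\theta^T x + \theta_0)$. So, our objective function is:

$$J_{lr}(\theta, \theta_0) = \frac{1}{n} \sum_{i=1}^{n} L_{nll}\left(\sigma(\theta^T x^{(i)} + \theta_0),\ y^{(i)}\right) + \frac{\lambda}{2} \lVert \theta \rVert^2$$

Here $L_{nll}$ is the negative log-likelihood loss and $\lambda \ge 0$ sets the strength of the regularizer.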

We use the factor $\frac{1}{2}$ as a constant for convenience to make the differentiation nicer. Using $\lVert \theta \rVert^2$ as a regularizer (L2 regularization) forces the magnitude of the separator to stay small, so that it doesn’t overfit the data.
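To make the objective concrete, here is a minimal NumPy sketch. It assumes labels in $\{0, 1\}$, the negative log-likelihood loss, and a $\frac{\lambda}{2}\lVert\theta\rVert^2$ penalty. The function name `lr_objective`, the row-per-example layout of `X`, and the clipping constant `eps` are illustrative choices, not part of the original notes.

```python
import numpy as np

def sigmoid(z):
    """Logistic function, applied elementwise."""
    return 1.0 / (1.0 + np.exp(-z))

def lr_objective(theta, theta_0, X, y, lam):
    """Mean NLL loss plus (lam / 2) * ||theta||^2.

    Assumed shapes: X is (n, d), y is (n, 1) with labels in {0, 1},
    theta is (d, 1), theta_0 is a float.
    """
    g = sigmoid(X @ theta + theta_0)           # predictions, shape (n, 1)
    eps = 1e-12                                # assumed guard against log(0)
    g = np.clip(g, eps, 1.0 - eps)
    nll = -(y * np.log(g) + (1.0 - y) * np.log(1.0 - g))
    # theta_0 is deliberately left out of the penalty term
    return float(np.mean(nll) + 0.5 * lam * np.sum(theta ** 2))

# Hypothetical toy data: two points in two dimensions
X = np.array([[1.0, 2.0], [-1.0, 0.5]])
y = np.array([[1.0], [0.0]])
print(lr_objective(np.zeros((2, 1)), 0.0, X, y, lam=0.1))
```

With all parameters at zero every prediction is 0.5, so the printed value should be close to $\log 2$, and the penalty contributes nothing.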

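Finding the gradient of the objective $J_{lr}(\theta, \theta_0)$, we have:

$$\nabla_\theta J_{lr}(\theta, \theta_0) = \frac{1}{n} \sum_{i=1}^{n} \left(g^{(i)} - y^{(i)}\right) x^{(i)} + \lambda \theta$$

$$\frac{\partial J_{lr}(\theta, \theta_0)}{\partial \theta_0} = \frac{1}{n} \sum_{i=1}^{n} \left(g^{(i)} - y^{(i)}\right)$$

where $g^{(i)} = \sigma(\theta^T x^{(i)} + \theta_0)$ is the prediction for the $i$-th example.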

Note that $\nabla_\theta J_{lr}$ will be of shape $d \times 1$ and $\partial J_{lr} / \partial \theta_0$ will be a scalar (we have separated $\theta$ and $\theta_0$ in this case).
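The shapes can be checked with a short NumPy sketch of the two gradients. It assumes labels in $\{0, 1\}$ and the negative log-likelihood loss with an L2 penalty weighted by `lam`. The name `lr_gradients` and the layout of `X` (one example per row) are assumptions made for illustration.

```python
import numpy as np

def sigmoid(z):
    """Logistic function, applied elementwise."""
    return 1.0 / (1.0 + np.exp(-z))

def lr_gradients(theta, theta_0, X, y, lam):
    """Gradients of the regularized logistic regression objective.

    Assumed shapes: X is (n, d), y is (n, 1), theta is (d, 1).
    """
    n = X.shape[0]
    err = sigmoid(X @ theta + theta_0) - y       # g - y, shape (n, 1)
    grad_theta = X.T @ err / n + lam * theta     # shape (d, 1)
    grad_theta_0 = float(np.sum(err) / n)        # scalar, no penalty term
    return grad_theta, grad_theta_0

# Hypothetical toy data: three points in two dimensions
X = np.array([[1.0, 2.0], [-1.0, 0.5], [0.0, -1.0]])
y = np.array([[1.0], [0.0], [0.0]])
grad_theta, grad_theta_0 = lr_gradients(np.zeros((2, 1)), 0.0, X, y, lam=0.1)
print(grad_theta.shape, type(grad_theta_0))
```

Keeping `theta_0` separate means the offset is never penalized, which is why only `grad_theta` carries the `lam * theta` term.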

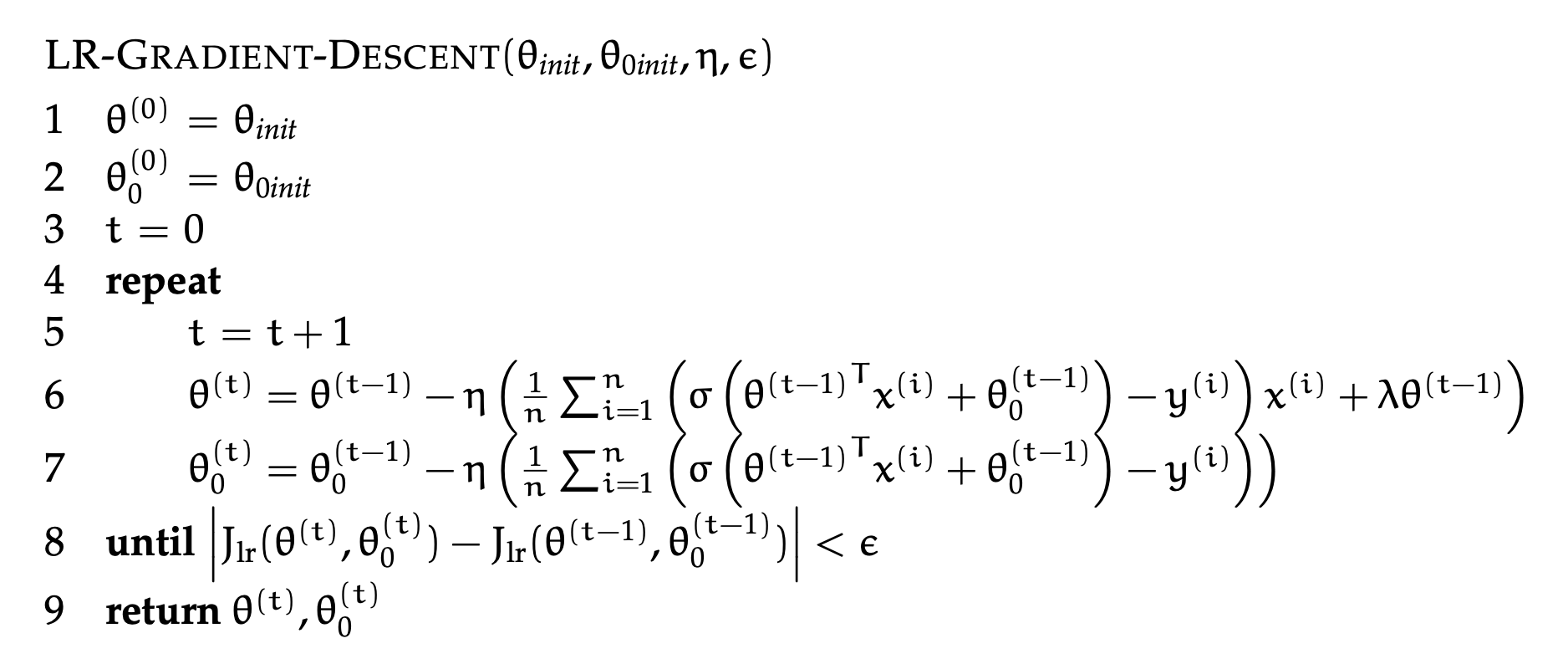

Putting everything together, the gradient descent algorithm for logistic regression becomes:
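As a rough sketch in Python with NumPy, the procedure could look like the following. It assumes labels in $\{0, 1\}$, zero initialization, a fixed step size `eta`, and a fixed number of iterations in place of a convergence test. These choices, along with the function name `lr_gradient_descent`, are illustrative assumptions rather than the notes' exact algorithm.

```python
import numpy as np

def sigmoid(z):
    """Logistic function, applied elementwise."""
    return 1.0 / (1.0 + np.exp(-z))

def lr_gradient_descent(X, y, lam=0.01, eta=0.1, num_iters=2000):
    """Gradient descent on the L2-regularized logistic regression objective.

    Assumed shapes: X is (n, d), y is (n, 1) with labels in {0, 1}.
    Returns theta of shape (d, 1) and the scalar offset theta_0.
    """
    n, d = X.shape
    theta = np.zeros((d, 1))
    theta_0 = 0.0
    for _ in range(num_iters):
        err = sigmoid(X @ theta + theta_0) - y       # g - y, shape (n, 1)
        grad_theta = X.T @ err / n + lam * theta     # shape (d, 1)
        grad_theta_0 = float(np.sum(err) / n)        # scalar
        # Update both parameters from gradients taken at the same point
        theta = theta - eta * grad_theta
        theta_0 = theta_0 - eta * grad_theta_0
    return theta, theta_0

# Hypothetical 1-D toy data: negatives on the left, positives on the right
X = np.array([[-2.0], [-1.0], [1.0], [2.0]])
y = np.array([[0.0], [0.0], [1.0], [1.0]])
theta, theta_0 = lr_gradient_descent(X, y)
preds = (sigmoid(X @ theta + theta_0) > 0.5).astype(float)
print(theta.ravel(), theta_0, preds.ravel())
```

In practice the loop would usually stop once the change in the objective or the size of the gradient falls below a tolerance; a fixed iteration count just keeps the sketch short.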