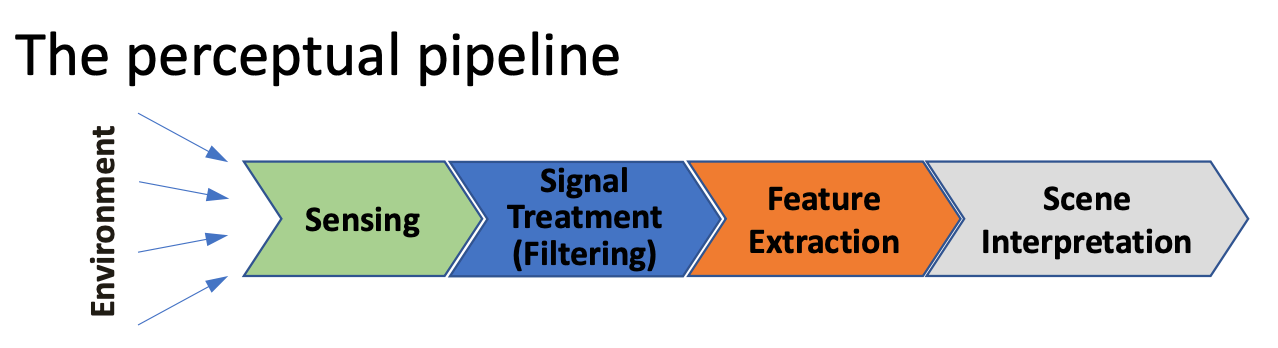

Once we have a sensor image and have optionally filtered it (e.g., smoothed it or run edge detection), we need to extract useful information from it. This step is called feature extraction. Feature extraction, or scene interpretation, is required for more sophisticated, long-term perceptual tasks.

Definition: Feature

Recognizable structure of elements in the environment.

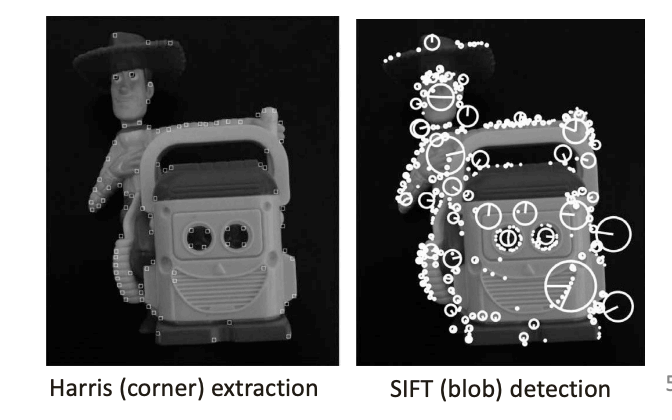

Low-level features:

- First-stage abstraction of the raw data (pixels); can be computed directly from pixel patterns

- Geometric primitives such as lines/points, corners, circles, polygons, etc.

- Require no understanding of object identity; just geometric, not semantic
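
As a rough illustration of a low-level feature computed directly from pixel patterns, the sketch below scores each pixel with a Harris-style corner response. The notes do not name a specific detector, so the choice of Harris is an assumption made for illustration, as are the function names `gradients` and `harris_response`, the window `radius`, and the constant `k = 0.05`. It uses plain Python lists so it runs without any libraries; a real pipeline would normally use an optimized library routine instead.

```python
def gradients(img):
    """Central-difference image gradients; border pixels are left at zero."""
    h, w = len(img), len(img[0])
    ix = [[0.0] * w for _ in range(h)]
    iy = [[0.0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            ix[y][x] = (img[y][x + 1] - img[y][x - 1]) / 2.0
            iy[y][x] = (img[y + 1][x] - img[y - 1][x]) / 2.0
    return ix, iy


def harris_response(img, k=0.05, radius=1):
    """Harris-style corner score per pixel (k and radius are assumed values).

    Positive scores suggest a corner, negative scores an edge,
    and scores near zero a flat region.
    """
    h, w = len(img), len(img[0])
    ix, iy = gradients(img)
    resp = [[0.0] * w for _ in range(h)]
    for y in range(radius, h - radius):
        for x in range(radius, w - radius):
            # Accumulate the 2x2 structure tensor over a square window.
            sxx = sxy = syy = 0.0
            for dy in range(-radius, radius + 1):
                for dx in range(-radius, radius + 1):
                    gx = ix[y + dy][x + dx]
                    gy = iy[y + dy][x + dx]
                    sxx += gx * gx
                    sxy += gx * gy
                    syy += gy * gy
            det = sxx * syy - sxy * sxy
            trace = sxx + syy
            resp[y][x] = det - k * trace * trace
    return resp


# Synthetic 10x10 test image: a bright square in the lower-right quadrant.
image = [[1.0 if (y >= 5 and x >= 5) else 0.0 for x in range(10)]
         for y in range(10)]
response = harris_response(image)

print(response[5][5] > 0)     # corner of the square: positive score
print(response[7][5] < 0)     # left edge of the square: negative score
print(response[2][2] == 0.0)  # flat background: zero score
```

Note that the score depends only on local intensity gradients. Nothing in it knows what object the corner belongs to, which is the sense in which low-level features are geometric rather than semantic.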

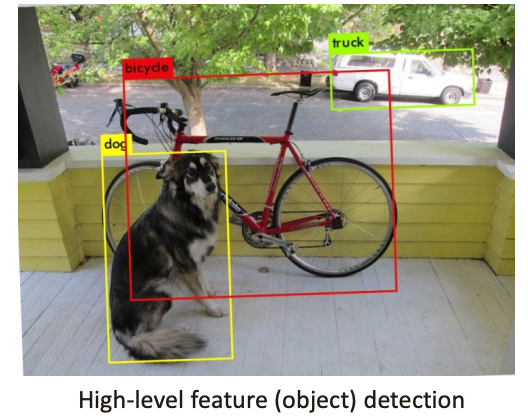

High-level features:

- Maximum abstraction from the raw data

- Objects: doors, tables, or trash cans

- Require another layer of algorithms, e.g., segmentation or classification

- Also called “semantic features”

The term local feature is also used to mean a small pattern in the image that is distinctive, repeatable, and robust. Some examples could be small image patches, edges, or points. These are also called “interest points”, “interest regions”, or “keypoints”.

Note that features must be distinctive and robustly detectable:

- Invariant to changes in conditions, e.g., viewpoint, illumination, scale, etc.
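
To make one of these invariances concrete, the sketch below compares image patches using zero-mean normalized cross-correlation (NCC). This is one common way to get invariance to brightness and contrast changes of the form `a * I + b` with `a > 0`; it is offered here as an assumed example, not as the method these notes prescribe. The names `normalize_patch` and `ncc` and the sample patches are hypothetical. It does not address viewpoint or scale changes.

```python
import math


def normalize_patch(patch):
    """Flatten a patch, subtract its mean, and scale it to unit length."""
    values = [float(v) for row in patch for v in row]
    mean = sum(values) / len(values)
    centered = [v - mean for v in values]
    norm = math.sqrt(sum(c * c for c in centered))
    if norm == 0.0:
        # A constant patch has no structure, so it is not distinctive.
        raise ValueError("flat patch has no distinctive structure")
    return [c / norm for c in centered]


def ncc(patch_a, patch_b):
    """Zero-mean normalized cross-correlation, a value in [-1, 1]."""
    a = normalize_patch(patch_a)
    b = normalize_patch(patch_b)
    return sum(x * y for x, y in zip(a, b))


# Made-up 3x3 patch, the same patch under a brightness/contrast change,
# and an unrelated patch.
patch = [[10, 20, 30], [40, 80, 20], [5, 60, 90]]
brighter = [[2 * v + 15 for v in row] for row in patch]
other = [[90, 10, 50], [20, 20, 70], [60, 5, 30]]

print(ncc(patch, brighter))  # very close to 1.0 despite different pixel values
print(ncc(patch, other))     # clearly lower for the unrelated patch
```

Subtracting the mean removes the offset `b` and dividing by the norm removes the gain `a`, so the two versions of the same patch still match. The rejected flat patch also illustrates the distinctiveness requirement: a region with no intensity variation cannot be matched reliably.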