- Nice explanation here: https://www.visiondummy.com/2014/03/divide-variance-n-1/

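In Gaussian maximum likelihood estimation from a sample, we found that the maximum likelihood solutions for our parameters, $\mu_{\mathrm{ML}}$ and $\sigma^2_{\mathrm{ML}}$, are given by:

$$
\mu_{\mathrm{ML}} = \frac{1}{N}\sum_{n=1}^{N} x_n, \qquad
\sigma^2_{\mathrm{ML}} = \frac{1}{N}\sum_{n=1}^{N} \left(x_n - \mu_{\mathrm{ML}}\right)^2
$$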

These are functions of the data set values, $x_1, \ldots, x_N$; we will suppose that each of these values has been generated independently from a Gaussian distribution whose true parameters are $\mu$ and $\sigma^2$.

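Let’s consider the expectations of the estimators $\mu_{\mathrm{ML}}$ and $\sigma^2_{\mathrm{ML}}$ with respect to these data set values. We get:

$$
\mathbb{E}\left[\mu_{\mathrm{ML}}\right] = \mu, \qquad
\mathbb{E}\left[\sigma^2_{\mathrm{ML}}\right] = \frac{N-1}{N}\,\sigma^2
$$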
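
The variance result can be checked with a short derivation. This is a sketch that relies on two standard facts not spelled out in these notes: the identity $\sum_n (x_n - \mu_{\mathrm{ML}})^2 = \sum_n (x_n - \mu)^2 - N(\mu_{\mathrm{ML}} - \mu)^2$, and the variance of the sample mean of $N$ independent draws, $\mathbb{E}[(\mu_{\mathrm{ML}} - \mu)^2] = \sigma^2 / N$.

$$
\begin{aligned}
\mathbb{E}\left[\sigma^2_{\mathrm{ML}}\right]
&= \frac{1}{N}\,\mathbb{E}\left[\sum_{n=1}^{N} (x_n - \mu)^2\right] - \mathbb{E}\left[(\mu_{\mathrm{ML}} - \mu)^2\right] \\
&= \sigma^2 - \frac{\sigma^2}{N} \\
&= \frac{N-1}{N}\,\sigma^2
\end{aligned}
$$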

What does this tell us?

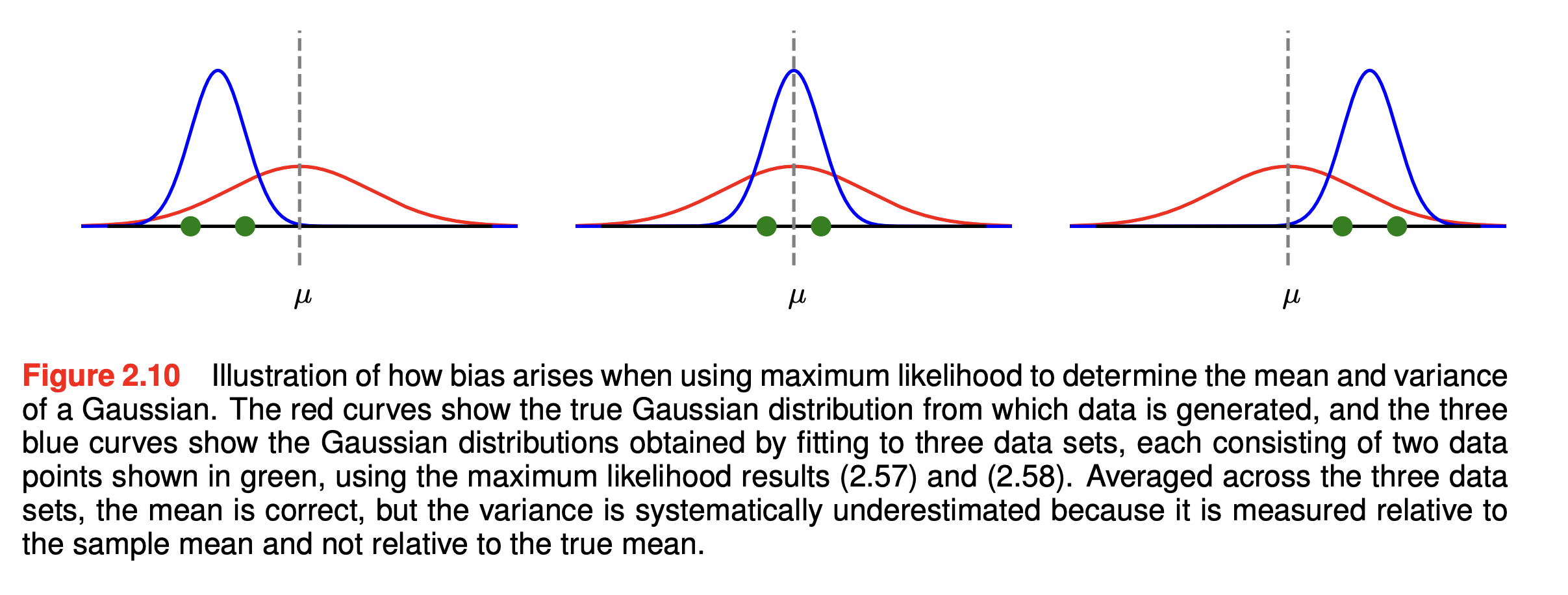

- When averaged over datasets of a given size, the maximum likelihood solution for the mean will equal the true mean.

- However, the maximum likelihood estimate of the variance will underestimate the true variance by a factor of $(N-1)/N$, where $N$ is the number of data points.
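
Both statements can be checked numerically. The following is a minimal simulation sketch, not a definitive experiment: the function name `average_ml_estimates`, the seed, and the chosen values (data sets of size 5, true mean 2, true standard deviation 3) are all illustrative assumptions. It draws many data sets of the same size and averages the two maximum likelihood estimates across them.

```python
import random
import statistics


def average_ml_estimates(n, true_mu, true_sigma, trials, seed=0):
    """Average the ML mean and ML variance over many simulated data sets.

    Hypothetical helper for illustration; all arguments are assumptions.
    """
    rng = random.Random(seed)
    mean_sum = 0.0
    var_sum = 0.0
    for _ in range(trials):
        # One data set of n independent Gaussian draws
        data = [rng.gauss(true_mu, true_sigma) for _ in range(n)]
        mu_ml = statistics.fmean(data)
        # pvariance divides by n, which matches the ML variance estimator
        var_ml = statistics.pvariance(data, mu_ml)
        mean_sum += mu_ml
        var_sum += var_ml
    return mean_sum / trials, var_sum / trials


n, true_mu, true_sigma = 5, 2.0, 3.0
avg_mu, avg_var = average_ml_estimates(n, true_mu, true_sigma, trials=10000)

print("average ML mean:    ", avg_mu)
print("average ML variance:", avg_var)
print("true variance:      ", true_sigma ** 2)
print("(N-1)/N * variance: ", (n - 1) / n * true_sigma ** 2)
```

With these settings the average ML mean should land close to 2, while the average ML variance should land close to $(4/5) \cdot 9 = 7.2$ rather than the true value of 9.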

This is an example of bias, where the expected value of an estimator differs systematically from the true value of the quantity being estimated.

This bias arises because the variance is measured relative to the maximum likelihood estimate of the mean, which is itself tuned to the data. If we had access to the true mean $\mu$ and measured the variance relative to it instead, the resulting estimator would be unbiased:

$$
\mathbb{E}\left[\frac{1}{N}\sum_{n=1}^{N} \left(x_n - \mu\right)^2\right] = \sigma^2
$$

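Of course, we do not have access to the true mean but only to the observed data values. This leads to Bessel’s correction; for a Gaussian distribution, the following estimate for the variance parameter is unbiased:

$$
\widetilde{\sigma}^2 = \frac{N}{N-1}\,\sigma^2_{\mathrm{ML}} = \frac{1}{N-1}\sum_{n=1}^{N} \left(x_n - \mu_{\mathrm{ML}}\right)^2
$$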
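
As a rough numerical check, the sketch below averages two variance estimators over many simulated data sets: one measured around the true mean (dividing by $N$), and one measured around the sample mean with Bessel's correction (dividing by $N-1$). The helper name `average_variance_estimates`, the seed, and the parameter values are illustrative assumptions; `statistics.variance` from the Python standard library applies the $N-1$ divisor.

```python
import random
import statistics


def average_variance_estimates(n, true_mu, true_sigma, trials, seed=1):
    """Average two variance estimators over many simulated data sets.

    Hypothetical helper for illustration; all arguments are assumptions.
    """
    rng = random.Random(seed)
    known_mean_sum = 0.0
    bessel_sum = 0.0
    for _ in range(trials):
        data = [rng.gauss(true_mu, true_sigma) for _ in range(n)]
        # Squared deviations from the TRUE mean, divided by n
        known_mean_sum += sum((x - true_mu) ** 2 for x in data) / n
        # Squared deviations from the sample mean, divided by n - 1
        bessel_sum += statistics.variance(data)
    return known_mean_sum / trials, bessel_sum / trials


avg_known, avg_bessel = average_variance_estimates(
    n=5, true_mu=2.0, true_sigma=3.0, trials=10000
)
print("true variance:             ", 3.0 ** 2)
print("average, true mean known:  ", avg_known)
print("average, Bessel-corrected: ", avg_bessel)
```

Both averages should come out near the true variance of 9. In practice only the Bessel-corrected version is usable, since the true mean is unknown.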

Some notes:

- This bias becomes less significant as the number of data points increases

- In the case of the Gaussian, for anything other than small $N$, this bias will not prove to be a serious problem

- The issue of bias in maximum likelihood is closely related to the problem of Overfitting.
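
To get a feel for how quickly the bias fades, the short sketch below tabulates the factor $(N-1)/N$ for a few data set sizes. The function name `ml_variance_bias_factor` and the chosen sizes are illustrative assumptions.

```python
def ml_variance_bias_factor(n):
    """Return (n - 1) / n, the ratio of E[ML variance] to the true variance."""
    if n < 1:
        raise ValueError("n must be a positive integer")
    return (n - 1) / n


for n in (2, 5, 10, 100, 1000):
    print(f"N={n:5d}  factor={ml_variance_bias_factor(n):.3f}")
```

The factor is 0.5 for $N = 2$, so the ML variance is on average half the true variance, but it has already reached 0.99 by $N = 100$.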