Multi-layer neural networks allow us to express more complex hypotheses, since a single neural network layer can only express linear separators. Multi-layer neural networks, as the name suggests, combine multiple layers, most typically by feeding the outputs of one layer into the inputs of the next.

Nomenclature:

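- We use $l$ to name a layer.

- $m^l$ is the number of inputs to layer $l$

- $n^l$ is the number of outputs from layer $l$

- $W^l$ and $W_0^l$ are of shape $m^l \times n^l$ and $n^l \times 1$ respectively

- $f^l$ is the activation function of layer $l$

Then, writing $A^{l-1}$ for the $m^l \times 1$ input to layer $l$ (the output of the previous layer), the pre-activation outputs are the $n^l \times 1$ vector

$$Z^l = {W^l}^T A^{l-1} + W_0^l$$

and the activation outputs are the $n^l \times 1$ vector

$$A^l = f^l(Z^l)$$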
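As a rough illustration of the per-layer computation, here is a minimal NumPy sketch of a single layer's forward pass. It assumes the weights are stored with shape (inputs, outputs) and the offsets with shape (outputs, 1), so the weights are transposed before being applied to a column-vector input. The names `layer_forward` and `relu`, and the example numbers, are hypothetical and chosen only for illustration.

```python
import numpy as np

def relu(z):
    # Element-wise ReLU, used here only as an example activation function.
    return np.maximum(z, 0.0)

def layer_forward(W, W0, A_prev, f):
    """Forward pass of one layer (illustrative helper, not from the notes).

    W      : (m, n) weights, for m inputs and n outputs
    W0     : (n, 1) offsets
    A_prev : (m, 1) input column vector (the previous layer's activations)
    f      : activation function, applied element-wise
    """
    Z = W.T @ A_prev + W0  # pre-activation outputs, shape (n, 1)
    A = f(Z)               # activation outputs, shape (n, 1)
    return Z, A

# Hypothetical layer with m = 3 inputs and n = 2 outputs.
W = np.array([[1.0, -1.0],
              [0.5,  2.0],
              [-2.0, 0.0]])
W0 = np.array([[0.5], [-1.0]])
x = np.array([[1.0], [2.0], [3.0]])

Z, A = layer_forward(W, W0, x, relu)
print(Z.shape, A.shape)  # both have one row per output unit
```

Passing the activation as an argument keeps the linear step and the non-linear step visibly separate, and it reflects the convention that one activation function is shared by every unit in the layer.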

Activation function uniformity

It is technically possible to have different activation functions within the same layer, but for convenience in specification and implementation, we generally have the same activation function within a layer.

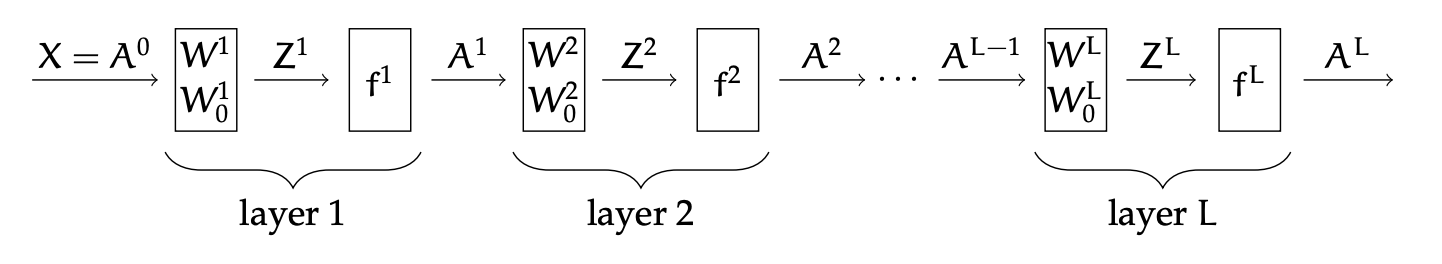

The diagram above shows a many-layered network, with two blocks for each layer: one representing the linear part of the operation and one representing the non-linear activation function. This structural decomposition is useful for organizing our algorithmic thinking and implementation.
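One way to mirror this two-blocks-per-layer decomposition in code is to give each block its own small class with a `forward` method and then chain them. The sketch below is only one possible arrangement: the class names `Linear`, `ReLU`, and `Identity`, the function `network_forward`, and all weights are assumptions made for illustration. It assumes weights of shape (inputs, outputs) and column-vector activations.

```python
import numpy as np

class Linear:
    """Linear block of a layer: Z = W^T A + W0."""
    def __init__(self, W, W0):
        self.W = W    # shape (m, n)
        self.W0 = W0  # shape (n, 1)

    def forward(self, A):
        return self.W.T @ A + self.W0

class ReLU:
    """Non-linear activation block, applied element-wise."""
    def forward(self, Z):
        return np.maximum(Z, 0.0)

class Identity:
    """Activation block that leaves its input unchanged."""
    def forward(self, Z):
        return Z

def network_forward(blocks, x):
    # Feed the output of each block into the next one.
    A = x
    for block in blocks:
        A = block.forward(A)
    return A

# Hypothetical two-layer network: 2 inputs -> 3 hidden units -> 1 output.
blocks = [
    Linear(np.array([[1.0, -1.0, 0.0],
                     [0.0,  1.0, 1.0]]),
           np.array([[0.0], [0.0], [-1.0]])),
    ReLU(),
    Linear(np.array([[1.0], [2.0], [3.0]]),
           np.array([[0.5]])),
    Identity(),
]

x = np.array([[2.0], [1.0]])
y = network_forward(blocks, x)
print(y)
```

Because every block exposes the same `forward` interface, adding a layer amounts to appending one linear block and one activation block to the list.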