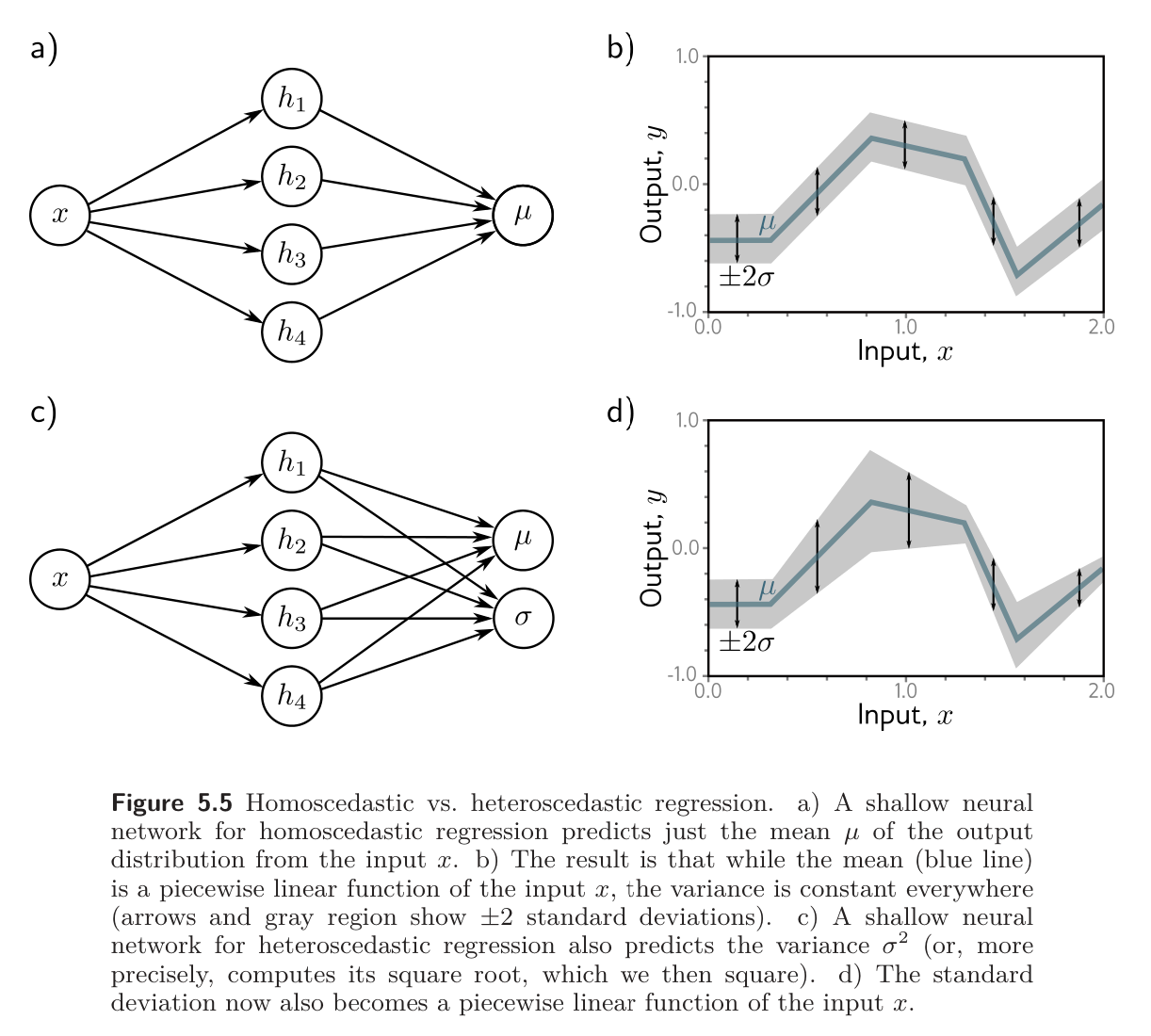

In simple univariate regression, we usually assume that the variance of the data is constant everywhere (a homoscedastic model). However, this assumption might be unrealistic. When the uncertainty of the model varies as a function of the input data, the model is said to be heteroscedastic.
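To make the distinction concrete, here is a minimal sketch that draws toy data whose noise standard deviation grows with the input. The mean `2x` and noise level `0.1 + x` are arbitrary illustrative choices (assumptions, not taken from the text), and the helper names are hypothetical.

```python
import random


def sample_heteroscedastic(n, seed=0):
    """Draw (x, y) pairs whose noise standard deviation grows with x.

    Hypothetical toy data: mean 2x, noise std 0.1 + x, for x in [0, 1].
    """
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        x = rng.uniform(0.0, 1.0)
        sigma = 0.1 + x                      # uncertainty depends on the input
        y = 2.0 * x + rng.gauss(0.0, sigma)
        data.append((x, y))
    return data


def residual_variance(data, lo, hi):
    """Empirical variance of y - 2x for the points with lo <= x < hi."""
    r = [y - 2.0 * x for x, y in data if lo <= x < hi]
    mean = sum(r) / len(r)
    return sum((v - mean) ** 2 for v in r) / len(r)


data = sample_heteroscedastic(5000)
print(residual_variance(data, 0.0, 0.2))  # small spread near x = 0
print(residual_variance(data, 0.8, 1.0))  # much larger spread near x = 1
```

A homoscedastic model would assign the same variance to both regions; a heteroscedastic one can follow the growing spread.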

A simple way to model this is to train a neural network that computes both the mean and the variance.

- Example: Consider a shallow network with two outputs. We denote the first output as $f_1[x, \phi]$ and use it to predict the mean, and we denote the second as $f_2[x, \phi]$ and use it to predict the variance.
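A shallow network of this kind can be sketched in plain Python as follows. The parameter layout (a dict with hidden biases `b_h`, input weights `w_h`, output biases `b_o` and output weights `w_o`) and the parameter values are hypothetical, chosen only for illustration. Both outputs share the same hidden units and differ only in their output weights.

```python
def relu(z):
    """Rectified linear unit."""
    return max(0.0, z)


def shallow_net(x, phi):
    """Shallow network: one input, D hidden ReLU units, two outputs.

    phi is a dict with a hypothetical layout:
      'b_h', 'w_h' : hidden-unit biases and input weights (length D each)
      'b_o'        : the two output biases
      'w_o'        : two lists of D hidden-to-output weights, one per output
    Returns the raw outputs (f1, f2).
    """
    h = [relu(b + w * x) for b, w in zip(phi["b_h"], phi["w_h"])]
    f1 = phi["b_o"][0] + sum(w * hd for w, hd in zip(phi["w_o"][0], h))
    f2 = phi["b_o"][1] + sum(w * hd for w, hd in zip(phi["w_o"][1], h))
    return f1, f2


# Arbitrary illustrative parameters with D = 3 hidden units.
phi = {
    "b_h": [0.0, -0.5, 0.5],
    "w_h": [1.0, 1.0, -1.0],
    "b_o": [0.1, 0.2],
    "w_o": [[1.0, -2.0, 0.5], [0.3, 0.3, -1.0]],
}

print(shallow_net(1.0, phi))   # f1 is the mean output, f2 the raw variance output
print(shallow_net(-1.0, phi))  # with these parameters f2 is negative here
```

With these parameters the second output is negative at `x = -1.0`, which is why $f_2[x, \phi]$ cannot be used directly as a variance.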

Note that the variance must be positive, but we cannot guarantee that the network will produce a positive output. To ensure that the computed variance is positive, we pass the second network output through a function that maps an arbitrary value to a positive one. A suitable choice is the squaring function, giving

$$
\mu = f_1[x, \phi], \qquad \sigma^2 = f_2[x, \phi]^2,
$$

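which results in the loss function

$$
L[\phi] = -\sum_{i=1}^{I} \left( \log\left[ \frac{1}{\sqrt{2\pi f_2[x_i, \phi]^2}} \right] - \frac{(y_i - f_1[x_i, \phi])^2}{2 f_2[x_i, \phi]^2} \right)
$$

when we apply the same maximum likelihood criterion that we used for univariate regression. Here $\{x_i, y_i\}_{i=1}^{I}$ are the training pairs, and the fitted parameters are $\hat{\phi} = \operatorname{argmin}_{\phi} L[\phi]$.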
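The loss can be written out directly as a minimal sketch. It takes the two raw outputs for each training example as plain lists (an assumption made to keep the example independent of any network code), squares the second one to obtain the variance, and sums the Gaussian negative log-likelihood. The function names are hypothetical.

```python
import math


def mean_and_variance(f1, f2):
    """Turn the raw outputs into (mean, variance): mean = f1, variance = f2**2."""
    return f1, f2 ** 2


def heteroscedastic_nll(ys, f1s, f2s):
    """Gaussian negative log-likelihood summed over the examples.

    ys  : targets y_i
    f1s : first outputs f1[x_i, phi] (predicted means)
    f2s : second outputs f2[x_i, phi]; must be nonzero, since f2 = 0 means
          zero variance, where the loss is undefined (this sketch would
          raise ZeroDivisionError)
    """
    total = 0.0
    for y, f1, f2 in zip(ys, f1s, f2s):
        mu, var = mean_and_variance(f1, f2)
        log_norm = math.log(1.0 / math.sqrt(2.0 * math.pi * var))
        total -= log_norm - (y - mu) ** 2 / (2.0 * var)
    return total


# One target far from the predicted mean (residual of 3):
print(heteroscedastic_nll([3.0], [0.0], [1.0]))  # variance 1
print(heteroscedastic_nll([3.0], [0.0], [3.0]))  # variance 9 gives a lower loss
```

Predicting a larger variance shrinks the squared-error term for points that are hard to fit, while the log term penalizes inflating the variance everywhere. If $f_2$ is held at 1 for every input, the loss reduces to half the sum of squared errors plus a constant, which is the homoscedastic case. Because only $f_2^2$ enters the loss, $f_2$ and $-f_2$ give the same value.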