In the real world, there are uncertainties in the system dynamic model. Just because we assume something should be constant, like the resistance of a resistor, doesn’t mean it’s actually constant: the resistance may change slightly due to environmental changes.

This uncertainty of the system model is called Process Noise. The process noise produces estimation errors.

The Process Noise Variance is denoted by the letter $q$.

For a system with constant dynamics, the Covariance Extrapolation (Prediction) Equation becomes:

$$p_{n+1,n} = p_{n,n} + q_n$$

Kalman Filter Equations

Thus, we have the following updated Kalman Filter equations in 1D:

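State and Covariance Update

$$K_n = \frac{p_{n,n-1}}{p_{n,n-1} + r_n}$$

$$\hat{x}_{n,n} = \hat{x}_{n,n-1} + K_n \left( z_n - \hat{x}_{n,n-1} \right)$$

$$p_{n,n} = (1 - K_n)\, p_{n,n-1}$$

State and Covariance Predict

State

Constant system dynamics:

$$\hat{x}_{n+1,n} = \hat{x}_{n,n}$$

Constant velocity:

$$\hat{x}_{n+1,n} = \hat{x}_{n,n} + \Delta t\, \hat{\dot{x}}_{n,n}, \qquad \hat{\dot{x}}_{n+1,n} = \hat{\dot{x}}_{n,n}$$

Covariance

Constant system dynamics:

$$p_{n+1,n} = p_{n,n} + q_n$$

Constant velocity:

$$p^{x}_{n+1,n} = p^{x}_{n,n} + \Delta t^2\, p^{v}_{n,n}, \qquad p^{v}_{n+1,n} = p^{v}_{n,n} + q_n$$

Here $\hat{x}_{n,n-1}$ and $p_{n,n-1}$ are the predicted state and its variance, $z_n$ is the measurement, $r_n$ is the measurement variance, $K_n$ is the Kalman Gain, and $q_n$ is the process noise variance.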
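The update and predict steps listed above can be sketched in a few lines of Python for the constant-dynamics case. This is a minimal illustration rather than a reference implementation; the function names `kf_update` and `kf_predict_constant` are made up for this sketch.

```python
from typing import Tuple


def kf_update(x_pred: float, p_pred: float, z: float, r: float) -> Tuple[float, float, float]:
    """Measurement update. Returns (state estimate, estimate variance, Kalman Gain)."""
    k = p_pred / (p_pred + r)            # Kalman Gain
    x_est = x_pred + k * (z - x_pred)    # state update
    p_est = (1.0 - k) * p_pred           # covariance update
    return x_est, p_est, k


def kf_predict_constant(x_est: float, p_est: float, q: float) -> Tuple[float, float]:
    """Prediction for constant dynamics: the state carries over, the variance grows by q."""
    return x_est, p_est + q


# Toy numbers chosen only to make the arithmetic easy to follow.
x, p, k = kf_update(x_pred=0.0, p_pred=1.0, z=2.0, r=1.0)
print(x, p, k)                             # 1.0 0.5 0.5
print(kf_predict_constant(x, p, q=0.25))   # (1.0, 0.75)
```

With equal prediction and measurement variances the gain is 0.5, so the estimate lands halfway between the prediction and the measurement.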

Example: Temperature Estimation

We want to estimate the temperature of the liquid in a tank.

We assume that at a steady state, the liquid temperature is constant. However, some fluctuations in the true liquid temperature are possible. The system can be described with:

$$x_n = T + w_n$$

where:

- $T$ is the constant temperature

- $w_n$ is a random process noise with variance $q$
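To make the model concrete, here is a small sketch of how data of this kind could be generated: a constant temperature plus process noise, observed through a noisy sensor. The function name `simulate_tank`, the seed, and the process noise variance `q = 1e-4` are assumptions for illustration only; the measurement variance follows from the 0.1 degree measurement error used in this example.

```python
import random


def simulate_tank(true_temp, q, r, n, seed=0):
    """Return (true_values, measurements) for a constant temperature with noise.

    q is the process noise variance, r is the measurement noise variance.
    """
    rng = random.Random(seed)
    # True temperature fluctuates around the constant value (process noise).
    true_values = [true_temp + rng.gauss(0.0, q ** 0.5) for _ in range(n)]
    # Each measurement adds sensor noise on top of the true value.
    measurements = [x + rng.gauss(0.0, r ** 0.5) for x in true_values]
    return true_values, measurements


# q = 1e-4 is an assumed value; r = 0.1 ** 2 matches a 0.1 degree measurement error.
true_values, measurements = simulate_tank(50.0, q=1e-4, r=0.1 ** 2, n=10)
for x, z in zip(true_values, measurements):
    print(f"true = {x:.3f}, measured = {z:.3f}")
```

The generated numbers will differ from the values listed in this example, since those come from the original data set rather than from this sketch.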

Given info:

- Let’s assume a true temperature of 50 degrees.

- We assume the model is accurate, so we set the process noise variance $q$ to a small value.

- The measurement error (standard deviation) is 0.1 degrees Celsius.

- The measurements are taken every 5 seconds.

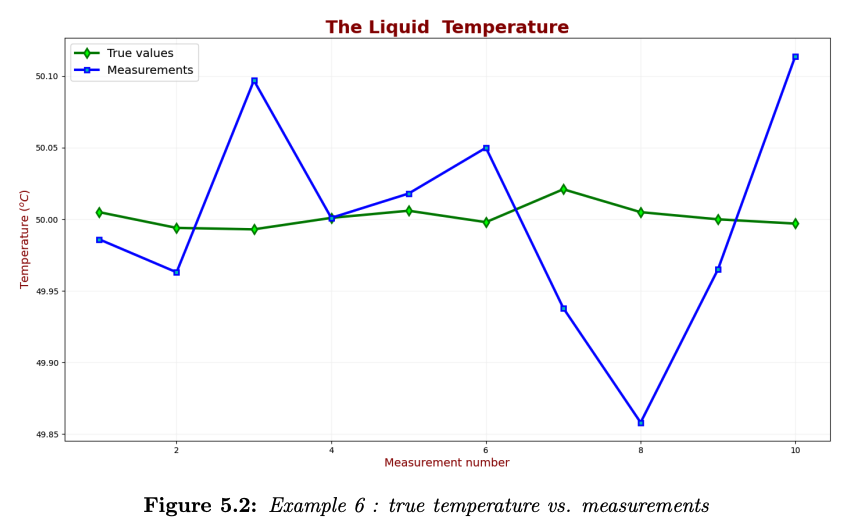

- The true liquid temperature values at the measurement points are: 50.005, 49.994, 49.993, 50.001, 50.006, 49.998, 50.021, 50.005, 50, and 49.997

- The measurements are: 49.986, 49.963, 50.09, 50.001, 50.018, 50.05, 49.938, 49.858, 49.965, and 50.114

The following chart compares the true liquid temperature and the measurements:

Iteration 0

Before the first iteration, we initialize the Kalman Filter and predict the next state.

We start with an initial guess $\hat{x}_{0,0}$. Our guess is imprecise, so we set our initialization estimate error $\sigma$ to a large value. Then, the estimate variance of the initialization is the error variance:

$$p_{0,0} = \sigma^2$$

Prediction: Since our model has constant dynamics, the predicted estimate is equal to the current estimate:

$$\hat{x}_{1,0} = \hat{x}_{0,0}$$

The extrapolated estimate variance:

$$p_{1,0} = p_{0,0} + q$$

Iteration 1

Measure: We get a measurement value of

$$z_1 = 49.986$$

Since the measurement error is 0.1, the measurement variance is:

$$r_1 = 0.1^2 = 0.01$$

Update: The Kalman Gain is

$$K_1 = \frac{p_{1,0}}{p_{1,0} + r_1} \approx 1$$

A Kalman Gain of almost 1 means that our estimate error is much bigger than our measurement error, so the update almost completely disregards the previous prediction and just uses the measurement.

Estimating the current state:

$$\hat{x}_{1,1} = \hat{x}_{1,0} + K_1 \left( z_1 - \hat{x}_{1,0} \right)$$

Updating the current estimate variance:

$$p_{1,1} = (1 - K_1)\, p_{1,0}$$

Predict: Since our system’s dynamic model is constant (the liquid temperature doesn’t change), we have

$$\hat{x}_{2,1} = \hat{x}_{1,1}$$

The extrapolated estimate variance is

$$p_{2,1} = p_{1,1} + q$$

Iteration 2

The second measurement is

$$z_2 = 49.963$$

Since the measurement error is 0.1, the measurement variance is

$$r_2 = 0.1^2 = 0.01$$

Update: Kalman Gain calculation gives us

$$K_2 = \frac{p_{2,1}}{p_{2,1} + r_2}$$

The Kalman Gain is around 0.5, so the estimate and the measurement are weighted almost equally.

Estimating the current state:

$$\hat{x}_{2,2} = \hat{x}_{2,1} + K_2 \left( z_2 - \hat{x}_{2,1} \right)$$

Updating the current estimate variance:

$$p_{2,2} = (1 - K_2)\, p_{2,1}$$

Predict: Since our system’s dynamic model is constant (the liquid temperature doesn’t change), we have

$$\hat{x}_{3,2} = \hat{x}_{2,2}$$

The extrapolated estimate variance is

$$p_{3,2} = p_{2,2} + q$$
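The first two iterations can be reproduced numerically with a short script. The measurements and the measurement variance come from the example; the initial guess of 60, the initial error of 100, and the process noise variance of 0.0001 are assumed placeholder values, because this section does not state them.

```python
measurements = [49.986, 49.963]   # first two measurements from the example
r = 0.1 ** 2                      # measurement variance (0.1 degree error)

# Assumed values, not given in the text:
x = 60.0                          # initial guess
p = 100.0 ** 2                    # initial estimate variance
q = 0.0001                        # process noise variance

# Iteration 0: predict the first state from the initialization.
x_pred, p_pred = x, p + q

gains = []
for z in measurements:
    k = p_pred / (p_pred + r)         # Kalman Gain
    x = x_pred + k * (z - x_pred)     # state update
    p = (1.0 - k) * p_pred            # covariance update
    x_pred, p_pred = x, p + q         # predict (constant dynamics)
    gains.append(k)
    print(f"K = {k:.4f}, estimate = {x:.3f}, variance = {p:.5f}")
```

Under these assumptions the first gain is very close to 1 and the second is close to 0.5, which matches the behavior described in the two iterations.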

Results & Analysis

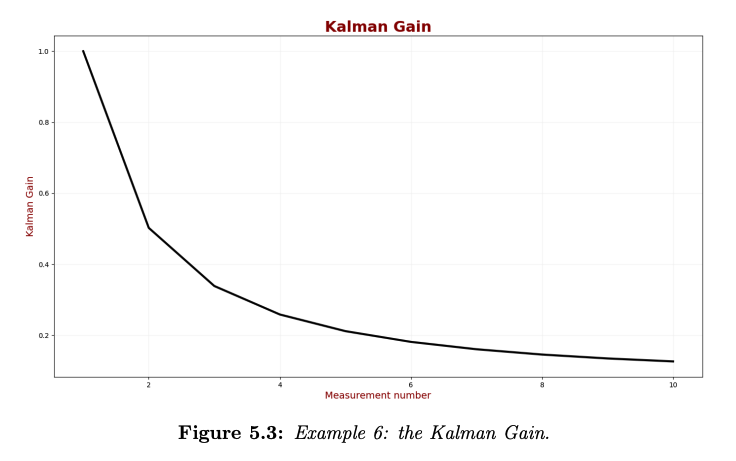

After 10 iterations, we plot the Kalman Gain:

As we can see, the Kalman Gain gradually decreases; therefore, the KF converges.
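In the 1D filter the Kalman Gain depends only on the variances, not on the measured values, so the gain curve can be computed ahead of time. The sketch below does this for 10 iterations; the helper name `gain_sequence`, the initial variance of 100 squared, and `q = 0.0001` are assumptions for illustration.

```python
def gain_sequence(p0, q, r, n):
    """Kalman Gain for n iterations of a 1D filter with constant dynamics."""
    gains = []
    p_pred = p0 + q                       # iteration 0 prediction
    for _ in range(n):
        k = p_pred / (p_pred + r)         # Kalman Gain
        gains.append(k)
        p_pred = (1.0 - k) * p_pred + q   # covariance update, then predict
    return gains


# Assumed p0 and q; r follows from the 0.1 degree measurement error.
gains = gain_sequence(p0=100.0 ** 2, q=0.0001, r=0.1 ** 2, n=10)
print([round(k, 4) for k in gains])
```

With these values the gain starts near 1 and falls at every step, since each measurement reduces the estimate variance faster than the small process noise increases it.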

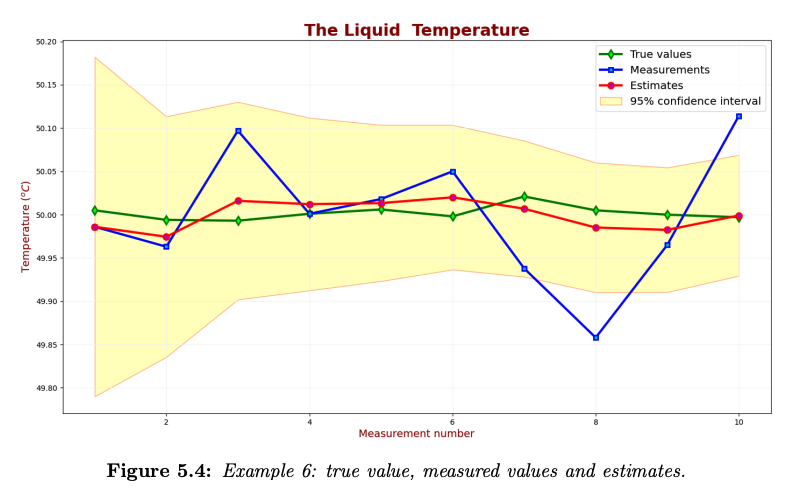

The following chart compares the true value, measured values, and estimates. The confidence interval is .

As you can see, the estimated value converges toward the true value. The KF estimates uncertainties are too high for the 95% confidence level. The yellow area is too broad; the KF is too conservative, thinking that its uncertainty is higher than it actually is, despite the fact that the estimates appear to track the true values fairly well.
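One way to check how conservative the filter is: compare each estimation error with the half-width of the 95% interval, taken here as 1.96 times the square root of the estimate variance. The sketch below uses the true values and measurements from this example; the initial guess, initial variance, and `q = 0.0001` are assumed values.

```python
import math

true_values = [50.005, 49.994, 49.993, 50.001, 50.006,
               49.998, 50.021, 50.005, 50.0, 49.997]
measurements = [49.986, 49.963, 50.09, 50.001, 50.018,
                50.05, 49.938, 49.858, 49.965, 50.114]
r = 0.1 ** 2                       # measurement variance

# Assumed values, not given in the text:
q = 0.0001                         # process noise variance
x_pred, p_pred = 60.0, 100.0 ** 2 + q

ratios = []                        # |error| divided by the 95% half-width
for truth, z in zip(true_values, measurements):
    k = p_pred / (p_pred + r)
    x = x_pred + k * (z - x_pred)
    p = (1.0 - k) * p_pred
    half_width = 1.96 * math.sqrt(p)
    ratios.append(abs(x - truth) / half_width)
    x_pred, p_pred = x, p + q

inside = [ratio <= 1.0 for ratio in ratios]
print(f"{sum(inside)} of {len(inside)} true values fall inside the 95% interval")
print(f"largest error is {max(ratios):.0%} of the interval half-width")
```

If every error stays well below the half-width, the interval is wider than it needs to be, which is what "too conservative" means here.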

Example (Failed): Increasing Liquid Temperature

We want to estimate the temperature of the liquid in the tank. In this case, the dynamic model of the system is not constant: the liquid is heating at a rate of about 0.1 degrees Celsius per second (roughly 0.5 degrees between consecutive measurements).

The Kalman Filter parameters are similar to the previous example:

- We assume that the model is accurate, so the process noise variance $q$ is again set to a very low value.

- The measurement error (standard deviation) is 0.1 degrees Celsius.

- The measurements are taken every 5 seconds.

- The dynamic model assumed by the filter is constant.

Let’s say that:

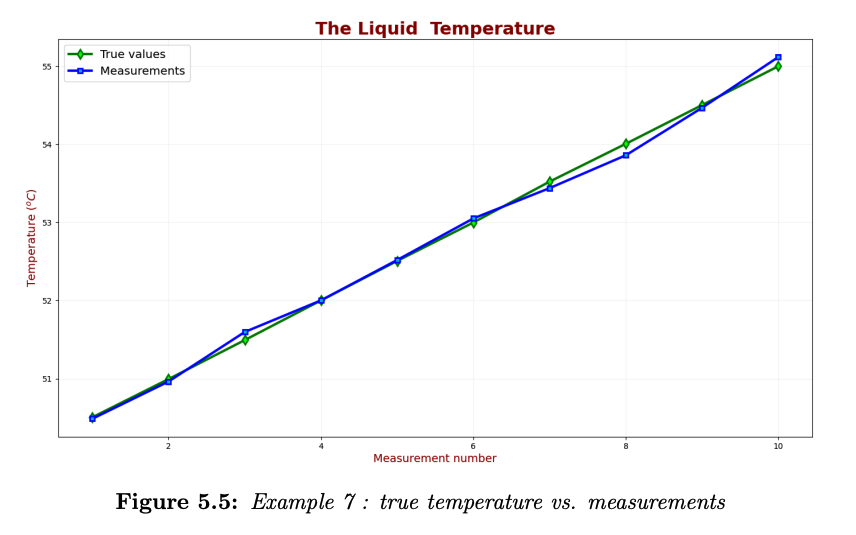

- The true liquid temperatures are: 50.505, 50.994, 51.493, 52.001, 52.506, 52.998, 53.521, 54.005, 54.5, and 54.997.

- The measurements are: 50.486, 50.963, 51.597, 52.001, 52.518, 53.05, 53.438, 53.858, 54.465, and 55.114.

The following chart compares the true liquid temperature and the measurements:

Iteration 0

Before the first iteration, we must initialize the Kalman Filter and predict the next state (which is the first state).

Initialization: We don’t know the true temperature of the liquid in the tank, so we start with an initial guess $\hat{x}_{0,0}$.

Our guess is imprecise, so we set our initialization estimate error to 100. The estimate variance of the initialization is the error variance:

$$p_{0,0} = 100^2 = 10{,}000$$

Prediction: We predict the next state based on the initialization values. Since our model has constant dynamics, the predicted estimate is equal to the current estimate:

$$\hat{x}_{1,0} = \hat{x}_{0,0}$$

The predicted estimate variance:

$$p_{1,0} = p_{0,0} + q$$

Results

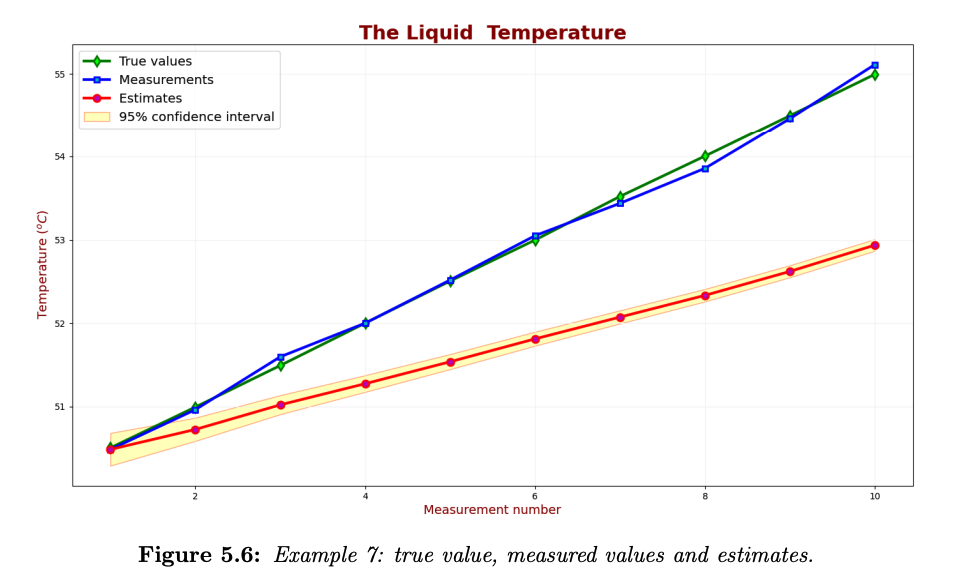

The following chart compares the true value, measured values and estimates over 10 iterations:

As we can see, the Kalman Filter has failed to provide a reliable estimation: the estimates lag behind the true values. There are two reasons for the lag error here:

- The dynamic model doesn’t fit the case. The model assumes a constant temperature, while in reality the temperature rises by about 0.5 degrees between measurements.

- We have chosen a very low process noise variance $q$, while the true temperature fluctuations are much more significant.

Another problem is the low estimate uncertainty. The KF failed to provide accurate estimates, yet it is confident in them. This is an example of a bad KF design.
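The lag and the overconfidence can both be seen numerically. The sketch below runs a constant-dynamics filter over the measurements of this example; the initial guess of 10 and `q = 0.0001` are assumed values (the initial error of 100 is the one used in the initialization step).

```python
import math

true_values = [50.505, 50.994, 51.493, 52.001, 52.506,
               52.998, 53.521, 54.005, 54.5, 54.997]
measurements = [50.486, 50.963, 51.597, 52.001, 52.518,
                53.05, 53.438, 53.858, 54.465, 55.114]
r = 0.1 ** 2                       # measurement variance

# Assumed values, not given in the text:
q = 0.0001                         # very low process noise variance
x_pred, p_pred = 10.0, 100.0 ** 2 + q

for z in measurements:
    k = p_pred / (p_pred + r)
    x = x_pred + k * (z - x_pred)
    p = (1.0 - k) * p_pred
    x_pred, p_pred = x, p + q      # constant model: no heating term

lag = true_values[-1] - x          # how far the last estimate trails the truth
half_width = 1.96 * math.sqrt(p)   # 95% interval half-width claimed by the filter
print(f"final estimate = {x:.2f}, true value = {true_values[-1]:.2f}")
print(f"lag = {lag:.2f} degrees, claimed 95% half-width = {half_width:.2f} degrees")
```

Under these assumptions the final estimate trails the true temperature by about two degrees, while the filter reports an interval of well under a tenth of a degree.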

There are two ways to fix the lag error:

- If we know that the liquid temperature can change linearly, we can define a new model that considers a possible linear change in the liquid temperature. We did this in Example 4. This method is preferred. However, this method won’t improve the Kalman Filter performance if the temperature change can’t be modeled.

- On the other hand, since our model is not well defined, we can adjust the process model reliability by increasing the process noise variance ($q$). See the next example for details.

Example (Good): Increasing Liquid Temperature

This example is similar to the previous example, with only one change. Since our process is not well-defined, we increase the process noise variance $q$ to a much higher value.

Iteration 0

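Initialization: We don’t know the true temperature of the liquid in the tank, so we use the same initial guess $\hat{x}_{0,0}$ and estimate variance $p_{0,0} = 100^2 = 10{,}000$ as in the previous example.

Prediction: Now we predict the next state based on the initialization values:

$$\hat{x}_{1,0} = \hat{x}_{0,0}$$

The extrapolated estimate variance:

$$p_{1,0} = p_{0,0} + q$$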

Results

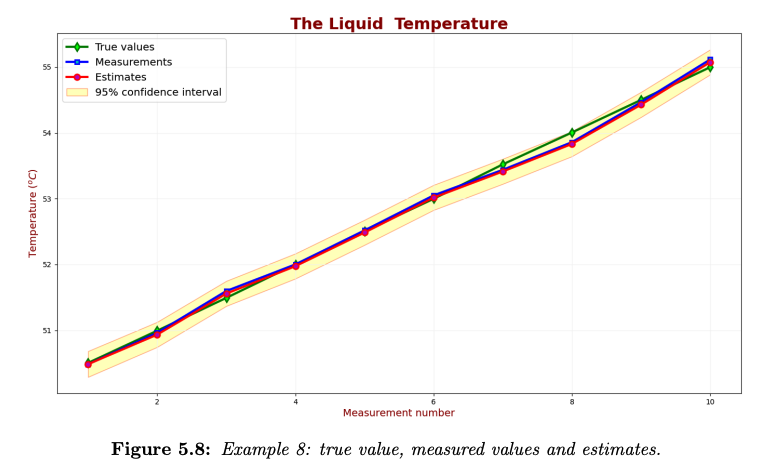

The following chart compares the true value, measured values, and estimates.

As you can see, the estimates following the measurements. There is no lag error.
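The effect of the larger process noise can be checked by running the same constant-dynamics filter twice on the heating data, once with a low and once with a high process noise variance. The helper `run_filter`, the initial guess of 10, and the two variances (0.0001 and 0.15) are assumptions for this sketch, not values stated in the text.

```python
def run_filter(measurements, q, r=0.1 ** 2, x0=10.0, p0=100.0 ** 2):
    """1D Kalman Filter with constant dynamics. Returns the list of estimates."""
    x_pred, p_pred = x0, p0 + q
    estimates = []
    for z in measurements:
        k = p_pred / (p_pred + r)
        x = x_pred + k * (z - x_pred)
        p = (1.0 - k) * p_pred
        estimates.append(x)
        x_pred, p_pred = x, p + q
    return estimates


true_values = [50.505, 50.994, 51.493, 52.001, 52.506,
               52.998, 53.521, 54.005, 54.5, 54.997]
measurements = [50.486, 50.963, 51.597, 52.001, 52.518,
                53.05, 53.438, 53.858, 54.465, 55.114]

low_q = run_filter(measurements, q=0.0001)   # assumed "low" process noise
high_q = run_filter(measurements, q=0.15)    # assumed "high" process noise

max_err_low = max(abs(e - t) for e, t in zip(low_q, true_values))
max_err_high = max(abs(e - t) for e, t in zip(high_q, true_values))
print(f"largest error with low q:  {max_err_low:.2f} degrees")
print(f"largest error with high q: {max_err_high:.2f} degrees")
```

With the higher variance the filter leans heavily on each new measurement, so the estimates stay within a fraction of a degree of the true values instead of drifting behind them.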

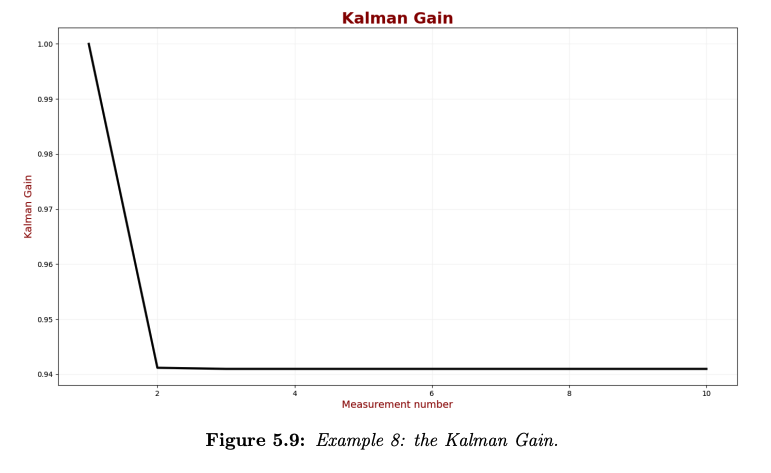

Let’s take a look at the Kalman Gain:

Due to the high process uncertainty, the measurement weight is much higher than the weight of the estimate. Thus, the Kalman Gain is high, and it converges to 0.94.
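For constant dynamics, the value the gain converges to can be derived in closed form. At steady state the predicted variance $p$ satisfies $p = \frac{r\,p}{p + r} + q$, which gives $p^2 - q\,p - q\,r = 0$. The sketch below solves this and also inverts it to find which process noise variance would produce a given gain. The function names are made up for this sketch, and `q = 0.15` is an inferred, assumed value rather than one quoted from the text.

```python
import math


def steady_state_gain(q, r):
    """Limit of the Kalman Gain for a 1D filter with constant dynamics."""
    # Positive root of p^2 - q*p - q*r = 0 (steady-state predicted variance).
    p_pred = (q + math.sqrt(q * q + 4.0 * q * r)) / 2.0
    return p_pred / (p_pred + r)


def q_for_gain(k, r):
    """Process noise variance that yields steady-state gain k (0 < k < 1)."""
    return k * k * r / (1.0 - k)


r = 0.1 ** 2                                  # measurement variance
print(round(steady_state_gain(0.15, r), 3))   # assumed q = 0.15
print(round(q_for_gain(0.94, r), 3))          # q implied by a gain of 0.94
```

With a measurement variance of 0.01, a steady-state gain of about 0.94 corresponds to a process noise variance of roughly 0.15, about fifteen times the measurement variance.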

The good news is we can trust the estimates of this KF. The true values are within the 95% confidence region.