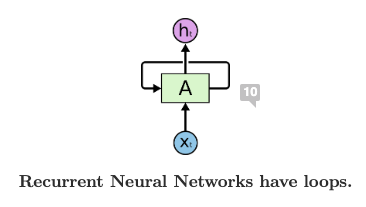

Recurrent neural networks have loops in them, allowing information to persist.

Here, a chunk of neural network looks at some input and outputs a value. A loop allows information to be passed from one step of the network to the next. Unlike a feedforward neural network, where information flows in only one direction, a recurrent network lets the output of some nodes affect the subsequent input to those same nodes.
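
To make the loop concrete, here is a minimal sketch of one step of a simple recurrent cell. It assumes an Elman-style cell with a `tanh` nonlinearity, h_t = tanh(W_xh·x_t + W_hh·h_prev + b_h). The names `rnn_step`, `x_t`, `h_prev`, `W_xh`, `W_hh` and `b_h` are illustrative and not taken from the text; vectors are plain Python lists so the example has no dependencies.

```python
import math

def rnn_step(x_t, h_prev, W_xh, W_hh, b_h):
    """One step of a simple (assumed Elman-style) recurrent cell:
    h_t = tanh(W_xh @ x_t + W_hh @ h_prev + b_h).
    x_t, h_prev, b_h are lists; W_xh, W_hh are lists of rows."""
    h_t = []
    for i in range(len(b_h)):
        # Contribution of the current input x_t to hidden unit i
        s = sum(W_xh[i][j] * x_t[j] for j in range(len(x_t)))
        # Contribution of the previous output h_prev: this term is the "loop"
        s += sum(W_hh[i][k] * h_prev[k] for k in range(len(h_prev)))
        h_t.append(math.tanh(s + b_h[i]))
    return h_t
```

The returned `h_t` is both this step's output and the `h_prev` fed back in at the next step, which is how a node's output comes to affect its own later input.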

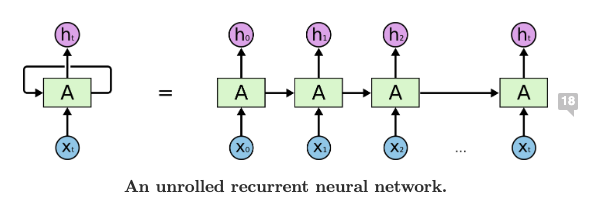

We can "unroll" a recurrent neural network and think of it instead as multiple copies of the same network, each passing a message to its successor.

This chain-like structure makes RNNs a natural fit for sequences and lists: the same network is applied at every position, however long the sequence is.
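
The unrolled view can be sketched as an ordinary loop. The example below assumes a scalar recurrent cell, h_t = tanh(w_x·x_t + w_h·h_{t-1} + b). The function name `unroll_rnn` and the parameters `w_x`, `w_h`, `b` and `h0` (with their default values) are hypothetical, chosen only for illustration. Each loop iteration plays the role of one copy of the network, and every copy shares the same parameters.

```python
import math

def unroll_rnn(xs, h0=0.0, w_x=0.5, w_h=0.9, b=0.0):
    """Run an assumed scalar recurrent cell over a sequence by unrolling it.
    Every iteration is one 'copy' of the same cell, sharing w_x, w_h and b.
    Returns the hidden state produced at each step."""
    hs = []
    h = h0
    for x_t in xs:
        # This copy sees the current input and the message h from its predecessor
        h = math.tanh(w_x * x_t + w_h * h + b)
        hs.append(h)
    return hs
```

For example, `unroll_rnn([1.0, 0.0])` returns two hidden states. The second one is nonzero even though its input is 0.0, because it carries information passed along from the first step. The same function accepts a sequence of any length without changing its parameters.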