Sigmoid Neuron

A neuron's activity is very low or zero when its input is low, then rises and approaches some maximum as the input increases. Several activation functions can represent this general behavior.

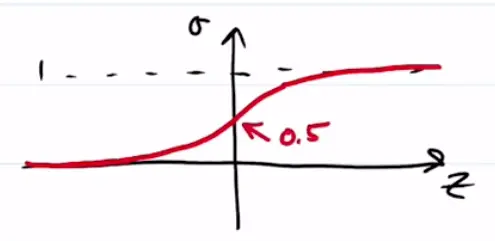

Logistic Curve

Goes from 0 to 1.

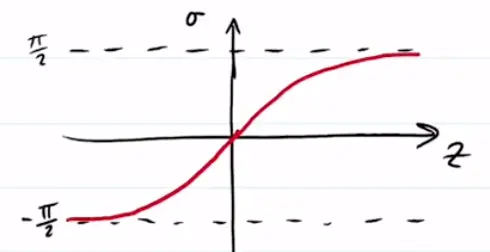
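As a rough sketch of the logistic curve, the snippet below uses the standard definition $\sigma(x) = 1 / (1 + e^{-x})$. The function name `logistic` and the sample inputs are illustrative choices, not something fixed by these notes. The two branches are only there so that very negative inputs do not overflow `math.exp`.

```python
import math

def logistic(x: float) -> float:
    """Logistic curve 1 / (1 + exp(-x)), arranged to avoid overflow."""
    if x >= 0:
        # exp(-x) <= 1 here, so this form is safe.
        return 1.0 / (1.0 + math.exp(-x))
    # For negative x, exp(x) < 1, so use the algebraically equal form.
    e = math.exp(x)
    return e / (1.0 + e)

# Low input gives activity near 0, high input saturates near 1.
for x in (-10.0, 0.0, 10.0):
    print(x, logistic(x))
```

The output stays strictly between 0 and 1 for moderate inputs and equals exactly 0.5 at zero input.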

Arctan

Goes from $-\pi/2$ to $\pi/2$ instead.

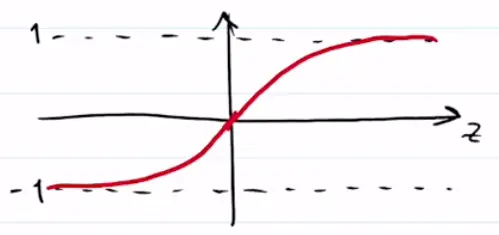

Hyperbolic Tangent

Goes from $-1$ to $1$.

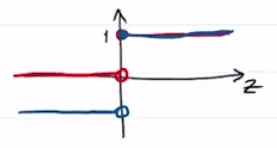
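To compare the output ranges of arctan and the hyperbolic tangent numerically, here is a small sketch using only Python's `math` module. The helper `tanh_via_logistic` is a hypothetical name. It illustrates the identity $\tanh(x) = 2\sigma(2x) - 1$, which says that tanh is a logistic curve stretched to the range $-1$ to $1$.

```python
import math

def logistic(x: float) -> float:
    """Standard logistic curve (fine for moderate x)."""
    return 1.0 / (1.0 + math.exp(-x))

def tanh_via_logistic(x: float) -> float:
    """tanh written as a shifted and rescaled logistic curve."""
    return 2.0 * logistic(2.0 * x) - 1.0

# arctan saturates at +/- pi/2, tanh saturates at +/- 1.
for x in (-50.0, -1.0, 0.0, 1.0, 50.0):
    print(x, math.atan(x), math.tanh(x))

# The two ways of computing tanh agree up to rounding.
print(math.tanh(0.7), tanh_via_logistic(0.7))
```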

Threshold

This is just the Heaviside step function: the output is $0$ for negative input and $1$ for positive input.

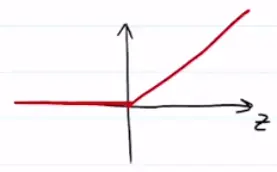
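A minimal sketch of the threshold activation follows. The value returned at exactly zero input is a matter of convention, so the `at_zero` parameter (defaulting to 1.0 here) is an assumption rather than something these notes specify.

```python
def heaviside(x: float, at_zero: float = 1.0) -> float:
    """Step function: 0 below zero, 1 above zero.

    at_zero is an assumed convention for the value at x == 0.
    """
    if x > 0:
        return 1.0
    if x < 0:
        return 0.0
    return at_zero

print([heaviside(x) for x in (-2.0, -0.1, 0.0, 0.1, 2.0)])
```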

Rectified Linear Unit (ReLU)

This is just a line that gets clipped below at zero:

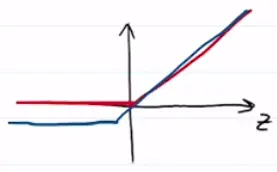

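Leaky ReLU is conceptually the same, but for negative input it follows a line with a small positive slope instead of clipping to zero, so the output goes a bit below zero. This can have some advantages, such as keeping a nonzero gradient for negative input: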

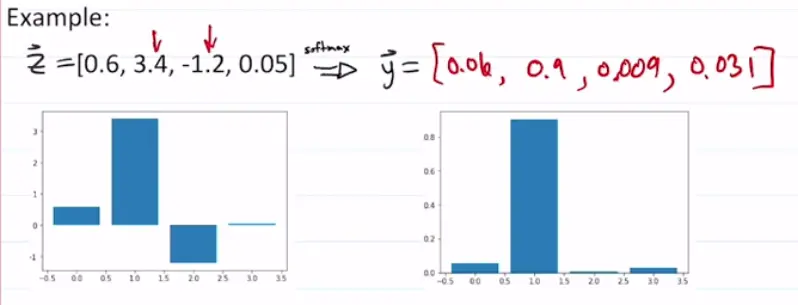
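Both rectifiers can be sketched in a couple of lines. The negative-side slope of `0.01` in `leaky_relu` is a commonly used value chosen here for illustration. It is an assumption, not a value given in these notes.

```python
def relu(x: float) -> float:
    """Identity for positive input, clipped to zero otherwise."""
    return max(0.0, x)

def leaky_relu(x: float, slope: float = 0.01) -> float:
    """Like ReLU, but negative input is scaled by a small slope (assumed 0.01)."""
    return x if x > 0 else slope * x

for x in (-2.0, 0.0, 3.0):
    print(x, relu(x), leaky_relu(x))
```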

Softmax

Softmax depends on multiple neurons. Its output is a probability distribution (or probability vector), so its elements add to $1$. If $\vec{z}$ is the drive (input) to a set of neurons, then:

$$\text{softmax}(\vec{z})_i = \frac{e^{z_i}}{\sum_j e^{z_j}}$$

Then, by definition,

$$\sum_i \text{softmax}(\vec{z})_i = 1$$

so they create a probability distribution. Thus, we can take a list of inputs (that are not a distribution) and turn them into a distribution with softmax.
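The following sketch turns a list of drives into a distribution with softmax: each input is exponentiated, then divided by the sum of all the exponentials. Subtracting the maximum before exponentiating is a standard numerical-stability trick that does not change the result. The input values are made up for illustration.

```python
import math

def softmax(z: list[float]) -> list[float]:
    """Exponentiate each input and normalize so the outputs sum to 1."""
    m = max(z)  # shifting by the max avoids overflow and leaves the result unchanged
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]

drive = [2.0, 1.0, -1.0]  # arbitrary inputs, not a distribution
y = softmax(drive)
print(y)
print(sum(y))  # 1 up to floating-point rounding
```

Larger inputs receive larger shares, and every output is strictly positive, even for negative inputs.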

One-Hot

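One-Hot is the extreme of the softmax, where only the largest element remains nonzero (it is set to $1$), while the others are set to zero. It can be viewed as the limit of the softmax as the inputs are multiplied by an ever larger factor, assuming the largest input is unique.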
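One way to see the connection between softmax and one-hot numerically is to multiply the inputs by a growing factor before applying softmax. In the sketch below, the `gain` parameter and the sample inputs are illustrative assumptions, and `one_hot` picks the first largest element if there is a tie.

```python
import math

def softmax(z: list[float], gain: float = 1.0) -> list[float]:
    """Softmax of gain * z; gain is a hypothetical sharpening factor."""
    scaled = [gain * v for v in z]
    m = max(scaled)
    exps = [math.exp(v - m) for v in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def one_hot(z: list[float]) -> list[float]:
    """1 at the position of the largest input, 0 everywhere else."""
    k = max(range(len(z)), key=lambda i: z[i])
    return [1.0 if i == k else 0.0 for i in range(len(z))]

z = [2.0, 1.0, -1.0]
for gain in (1.0, 10.0, 100.0):
    print(gain, softmax(z, gain))  # mass concentrates on the largest input
print(one_hot(z))
```

As `gain` grows, the softmax output approaches the one-hot vector for these inputs.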