Consider this expression:

This expression can be built using the following code:

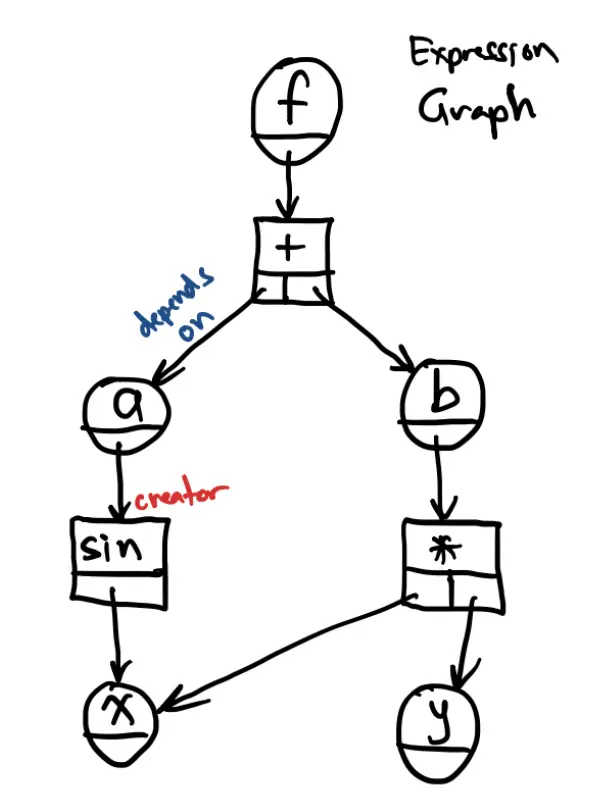

We can visualize this as an expression graph.

- Dependencies: Arrows on the graph

- Creators: Indicate which operations generated each variable

We can build a data structure to represent the expression graph using two different types of objects:

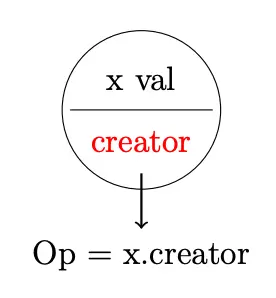

- Var: Stores a value and a creator (which is an Op)

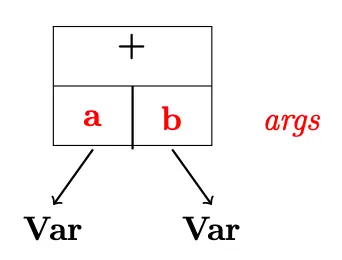

- Op: Takes arguments (which are Vars) and applies an operation
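As a minimal sketch of these two objects, we might write something like the following. The constructor signatures and the sample values are assumptions made for illustration; the figures may lay the classes out differently.

```python
class Var:
    """Stores a value and the Op that created it (None for an input)."""

    def __init__(self, val, creator=None):
        self.val = val
        self.creator = creator


class Op:
    """Keeps references to the Vars it is applied to."""

    def __init__(self, *args):
        self.args = list(args)


# Wire up one product node by hand (hypothetical values).
a = Var(2.0)
b = Var(3.0)
op = Op(a, b)
f = Var(a.val * b.val, creator=op)
```

Following f.creator and then its args walks the graph from an output back toward the inputs, which is the direction the arrows are traversed when computing derivatives.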

Example

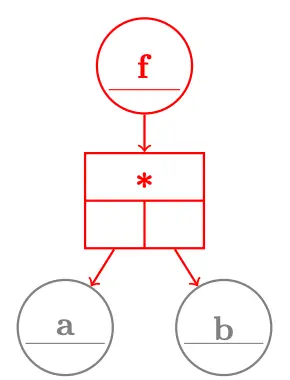

Consider the example f = a * b.

- Create Op object.

- Save references to args (a, b).

- Create a Var for output f.

- Set f.val to a.val * b.val.

- Set f.creator to this Op.
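The steps above can be sketched in code as follows. The class name Mul and the use of a call method are assumptions for illustration, not necessarily how the figure implements it.

```python
class Var:
    def __init__(self, val=None):
        self.val = val
        self.creator = None  # inputs have no creator


class Mul:
    """Hypothetical multiplication Op."""

    def __call__(self, a, b):
        self.args = [a, b]      # save references to args (a, b)
        f = Var()               # create a Var for the output f
        f.val = a.val * b.val   # set f.val to a.val * b.val
        f.creator = self        # set f.creator to this Op
        return f


a, b = Var(2.0), Var(5.0)
f = Mul()(a, b)  # create the Op object, then apply it to a and b
```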

Differentiation

The expression graph can also be used to compute derivatives. Each Var stores, in its member grad, the derivative of the overall expression with respect to that Var's value.

Consider:

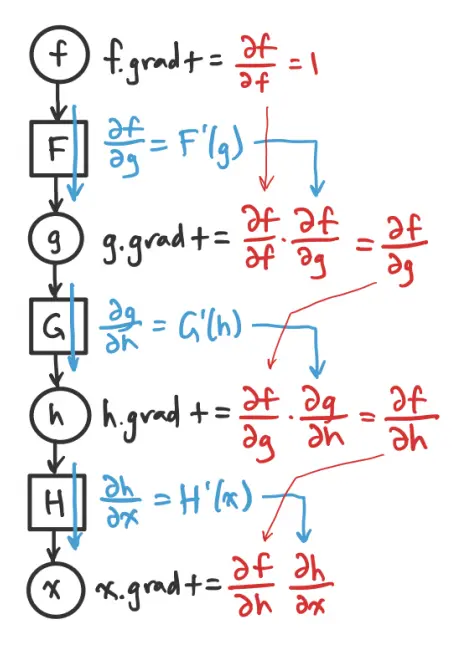

Now we can derive the chain rule. Define .

Here’s an expression graph that can be used to compute the derivatives:

Starting with a value of 1 at the top, we work our way down through the graph, incrementing the grad of each Var as we go.

Each Op contributes its factor (according to the chain rule) and passes the updated derivative down the graph.

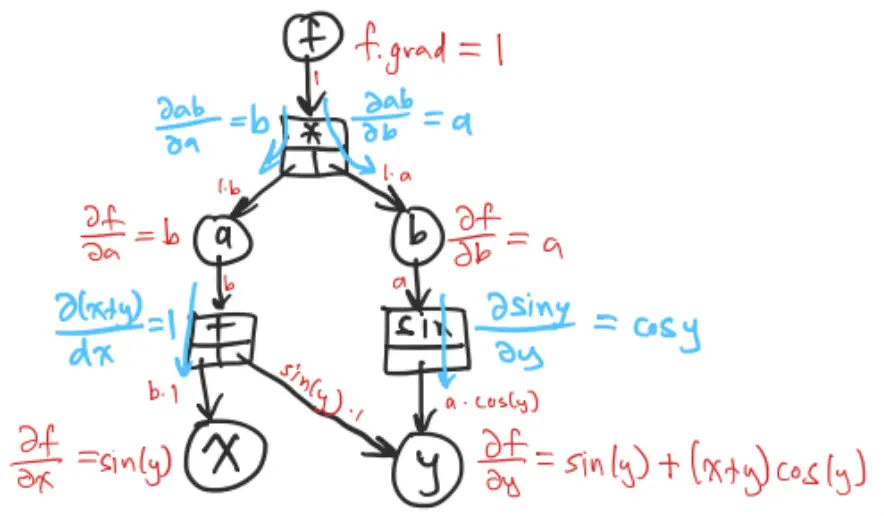

Example

Because of the chain rule, we multiply the factors as we work our way down a branch. We add the contributions wherever multiple branches converge.
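To make the multiply-then-add rule concrete, here is a small hand calculation for a hypothetical expression f = a * b + a (chosen for illustration, not the expression in the figure). The variable a is reached along two branches, so its derivative is the sum of two products. A finite-difference estimate is included only as a sanity check.

```python
a, b = 3.0, 4.0

# Write f = g + a with g = a * b.
# Branch 1: f -> g -> a, factors (df/dg) * (dg/da) = 1 * b
branch_via_g = 1.0 * b
# Branch 2: f -> a directly, factor df/da = 1
branch_direct = 1.0
# The two branches converge on a, so their contributions are added.
grad_a = branch_via_g + branch_direct


def f(a, b):
    return a * b + a


# Central finite difference as an independent check of grad_a.
eps = 1e-6
numeric = (f(a + eps, b) - f(a - eps, b)) / (2 * eps)
```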

Var class backward method:

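    class Var:
        def backward(self, s):
            # s is the upstream gradient; accumulate it into this Var's grad
            self.grad += s
            # Input Vars have no creator, so the recursion stops there
            if self.creator is not None:
                self.creator.backward(s)

- self.val, self.grad, and s must all have the same shape
- s is the upstream gradient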

Op class backward method:

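    class Op:
        def backward(self, s):
            # Pass s, scaled by this Op's local derivative, to each argument
            for x in self.args:
                x.backward(s * ∂(Op)/∂x)  # pseudocode: the factor depends on the operation

- s must match the shape of the operation’s output
- ∂(Op)/∂x is the derivative of the operation with respect to x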

Thus, the chain rule is applied recursively. At each node, the gradient is propagated backward through the graph.
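Putting the two backward methods together, a minimal runnable sketch for scalar values might look like this. The subclasses Mul and Add, the helper methods forward and partials, and the test expression f = a * b + a are all assumptions introduced for illustration.

```python
class Var:
    def __init__(self, val, creator=None):
        self.val = val
        self.grad = 0.0
        self.creator = creator

    def backward(self, s=1.0):
        self.grad += s                   # accumulate the upstream gradient
        if self.creator is not None:     # inputs have no creator
            self.creator.backward(s)


class Op:
    def __call__(self, *args):
        self.args = args
        out_val = self.forward(*[x.val for x in args])
        return Var(out_val, creator=self)

    def backward(self, s):
        # Chain rule: scale s by the local derivative for each argument.
        for x, d in zip(self.args, self.partials()):
            x.backward(s * d)


class Mul(Op):
    def forward(self, a, b):
        return a * b

    def partials(self):
        a, b = self.args
        return (b.val, a.val)   # d(ab)/da = b, d(ab)/db = a


class Add(Op):
    def forward(self, a, b):
        return a + b

    def partials(self):
        return (1.0, 1.0)       # d(a+b)/da = d(a+b)/db = 1


a, b = Var(3.0), Var(4.0)
g = Mul()(a, b)
f = Add()(g, a)         # f = a * b + a
f.backward(1.0)         # seed the top of the graph with 1
print(a.grad, b.grad)   # 5.0 3.0
```

Here a.grad receives one contribution through the product and one through the sum, so it ends up at b + 1 = 5, while b.grad is simply a = 3. This sketch revisits a shared node once per path leading to it, which is fine for small graphs but can become expensive on larger ones.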