The concept of entropy has its origins in physics, where it was introduced in the context of equilibrium thermodynamics and later given a deeper interpretation as a measure of disorder through developments in statistical mechanics.

This view of entropy can be understood by considering a set of $N$ identical objects that are to be divided amongst a set of bins, such that there are $n_i$ objects in the $i$-th bin. Consider the number of different ways of allocating the objects to the bins:

- There are $N$ ways to choose the first object

- There are $N - 1$ ways to choose the second object, and so on.

- This leads to a total of $N!$ ways to allocate all $N$ objects to the bins.

We don't want to distinguish between rearrangements of objects within each bin. In the $i$-th bin there are $n_i!$ ways of reordering the objects, and so the total number of ways of allocating the $N$ objects to the bins is given by:

$$
W = \frac{N!}{\prod_i n_i!}
$$

which is called the multiplicity.
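As a quick sanity check, the multiplicity $N! / \prod_i n_i!$ can be computed directly for small occupation numbers. The sketch below is illustrative only; the function name `multiplicity` and the example counts are assumptions, not part of the original text.

```python
from math import factorial, prod

def multiplicity(counts):
    """Return W = N! / prod(n_i!) for a list of occupation numbers n_i."""
    n_total = sum(counts)  # N, the total number of objects
    return factorial(n_total) // prod(factorial(n) for n in counts)

# Four objects over three bins with occupation numbers (2, 1, 1):
# 4! / (2! * 1! * 1!) = 24 / 2 = 12
print(multiplicity([2, 1, 1]))

# All objects in one bin can be arranged in only one way.
print(multiplicity([4, 0, 0]))
```

Integer division is exact here because $\prod_i n_i!$ always divides $N!$.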

The entropy is then defined as the logarithm of the multiplicity scaled by a constant factor $1/N$, so that

$$
H = \frac{1}{N} \ln W = \frac{1}{N} \ln N! - \frac{1}{N} \sum_i \ln n_i!
$$

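We can then apply Stirling's approximation, $\ln N! \simeq N \ln N - N$, to each factorial. This gives us:

$$
H \simeq \frac{1}{N}\left(N \ln N - N\right) - \frac{1}{N} \sum_i \left(n_i \ln n_i - n_i\right) = \ln N - 1 - \frac{1}{N} \sum_i n_i \ln n_i + \frac{1}{N} \sum_i n_i
$$

To simplify this, we use:

- $\sum_i n_i = N$, so $\frac{1}{N} \sum_i n_i = 1$ and $\ln N = \sum_i \frac{n_i}{N} \ln N$

This gives us:

$$
H \simeq -\sum_i \frac{n_i}{N} \ln \frac{n_i}{N}
$$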

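Consider the limit $N \to \infty$, in which the fractions $n_i / N$ approach the probabilities $p_i$ of finding an object in the $i$-th bin:

$$
H = -\lim_{N \to \infty} \sum_i \frac{n_i}{N} \ln \frac{n_i}{N} = -\sum_i p_i \ln p_i
$$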
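The limit can be checked numerically: the exact value $\frac{1}{N} \ln\left(N! / \prod_i n_i!\right)$ should move towards $-\sum_i p_i \ln p_i$ as $N$ grows with the fractions held fixed. The following is a minimal sketch under that assumption; the function names and the base counts `(5, 3, 2)` are made up for illustration, and `math.lgamma(n + 1)` is used as a numerically stable way to obtain $\ln n!$.

```python
from math import lgamma, log

def entropy_from_counts(counts):
    """Exact (1/N) * ln(N! / prod(n_i!)), using lgamma(n + 1) = ln(n!)."""
    n_total = sum(counts)
    log_w = lgamma(n_total + 1) - sum(lgamma(n + 1) for n in counts)
    return log_w / n_total

def entropy_from_probs(probs):
    """Limiting form -sum(p_i * ln p_i), taking 0 * ln 0 as 0."""
    return sum(-p * log(p) for p in probs if p > 0)

base = [5, 3, 2]                         # illustrative occupation pattern
probs = [b / sum(base) for b in base]    # fixed fractions 0.5, 0.3, 0.2
limit = entropy_from_probs(probs)

# Scale every bin by the same factor so the fractions n_i / N stay fixed.
for scale in (1, 10, 1_000, 100_000):
    counts = [scale * b for b in base]
    exact = entropy_from_counts(counts)
    print(sum(counts), exact, limit - exact)
```

The printed gap shrinks as $N$ increases, which is consistent with Stirling's approximation becoming more accurate for large arguments.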

The specific allocation of objects into bins is called a microstate. The overall distribution of occupation numbers, expressed by the ratios $n_i / N$, is called a macrostate.
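To make the distinction concrete, one can enumerate every microstate for a small system and group them by occupation numbers. The sketch below assumes labelled objects and small, arbitrarily chosen sizes (`n_objects = 4`, `n_bins = 3`); it compares the number of microstates in each macrostate with $N! / \prod_i n_i!$.

```python
from collections import Counter
from itertools import product
from math import factorial, prod

n_objects, n_bins = 4, 3  # small illustrative sizes, chosen arbitrarily

# A microstate assigns a bin index to each labelled object.
# A macrostate is the tuple of occupation numbers, one entry per bin.
macrostate_sizes = Counter()
for microstate in product(range(n_bins), repeat=n_objects):
    occupation = tuple(microstate.count(b) for b in range(n_bins))
    macrostate_sizes[occupation] += 1

# Each macrostate should contain N! / prod(n_i!) microstates.
for occupation, size in sorted(macrostate_sizes.items()):
    expected = factorial(n_objects) // prod(factorial(n) for n in occupation)
    print(occupation, size, expected)
```

Brute-force enumeration grows as `n_bins ** n_objects`, so this is only practical for very small examples.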

We can interpret the bins as the states $x_i$ of a discrete random variable $X$, where $p(X = x_i) = p_i$. The entropy of the random variable $X$ is then:

$$
H[X] = -\sum_i p(x_i) \ln p(x_i)
$$

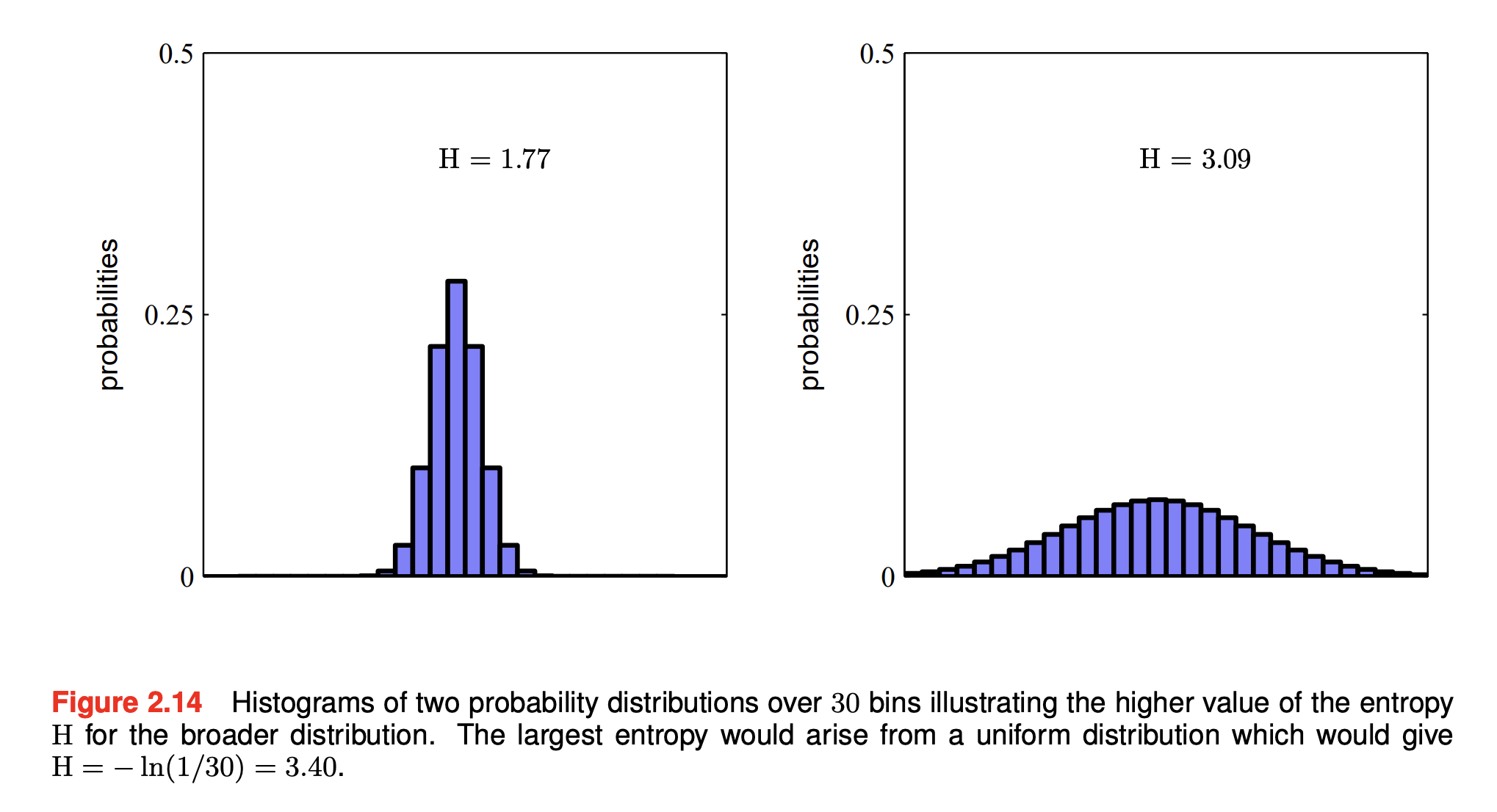

Distributions that are sharply peaked around a few values will have lower entropy, whereas those spread more evenly will have higher entropy. Because $0 \le p_i \le 1$, the entropy is non-negative, and it will equal its minimum value of $0$ when one of the $p_i = 1$ and all other $p_{j \ne i} = 0$.
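These properties are easy to see numerically. The sketch below uses three made-up distributions over four states (the names `peaked`, `uniform`, and `one_hot` are illustrative) and the natural logarithm, with the usual convention that terms with $p_i = 0$ contribute nothing.

```python
from math import log

def entropy(probs):
    """Return -sum(p_i * ln p_i), skipping zero-probability states."""
    return sum(-p * log(p) for p in probs if p > 0)

peaked = [0.97, 0.01, 0.01, 0.01]   # mass concentrated on one state
uniform = [0.25, 0.25, 0.25, 0.25]  # mass spread evenly
one_hot = [1.0, 0.0, 0.0, 0.0]      # a single certain outcome

print("peaked :", entropy(peaked))
print("uniform:", entropy(uniform))  # equals ln 4 for four equally likely states
print("one-hot:", entropy(one_hot))  # the minimum value, zero
```

The peaked distribution gives a much smaller value than the uniform one, and the one-hot distribution attains the minimum of zero.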