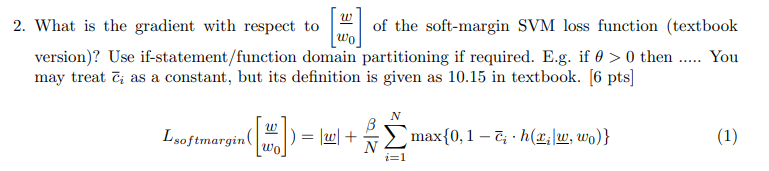

Q2

For the first term, , provided that this is the L2 norm, we have:

For the second term (hinge loss), we need to partition the domain based on whether the term inside the function is positive or not:

- If , then the data point is correctly classified. The function returns , and the gradient is .

- If , then the hinge loss affects the gradient, returning . We need to calculate the gradient of this term.

The gradient of with respect to is:

Assuming is a linear function, such as , the gradient of with respect to is:

Combining the two steps above, the gradient of hinge loss for case 2 is just .

Combining the two cases, we can write the gradient of the 2nd term as:

where is an indicator function that returns if the condition inside the brackets is true, and returns otherwise.

Combining everything, we have:

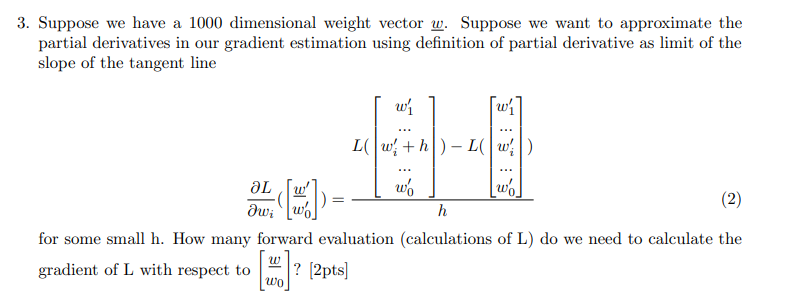

Q3

The given formula provides a numerical approximation of the partial derivative using the definition of partial derivative as the limit of the slope of the tangent line. It calculates the difference in the value of when is slightly perturbed by , divided by .

To calculate this approximation for each dimension of the 1000-dimensional weight vector , we need to:

- Evaluate once with the original weight vector

- For each dimension from to :

- Create a perturbed weight vector by adding to .

- Evaluate with this perturbed weight vector.

Therefore, we need:

- evaluation of with the original weight vector

- evaluations of for each perturbed weight vector

So, we have a total of forward evaluations.